Nvidia’s Tesla Midrange

- RRP $159

- April 17th 2007

- Purchase November 2024

- Purchase Price £10.00

Introduction – The GeForce 8000 Series

In November 2006, Nvidia launched the G80 architecture, debuting as the GeForce 8 series.

This generation marked a shift in GPU design, introducing Nvidia’s first unified shader architecture and breaking away from the separate, dedicated vertex and pixel shader units that had defined programmable graphics cards up to that point.

The G80 was also Nvidia’s first real step into unified shaders: the RSX “Reality Synthesizer” it had previously contributed to Sony’s PlayStation 3 still used separate vertex and pixel shader pipelines.

At the heart of this revolution was the G80 GPU, powering the flagship GeForce 8800 GTX. With 128 stream processors, support for DirectX 10, and a robust 384-bit memory interface, the 8800 GTX set a new standard for high-end gaming and professional graphics.

It was the first consumer GPU to fully embrace Shader Model 4.0, allowing developers to craft more complex visual effects with greater efficiency.

Beyond raw power, the 8000 series introduced 2nd-generation PureVideo HD, enhancing video playback with hardware acceleration for H.264 and VC-1 formats. It also laid the foundation for CUDA, Nvidia’s parallel computing platform, which would later transform scientific research and AI development.

While ATI’s HD 2000 series was making its own unified shader debut, Nvidia’s GeForce 8000 lineup arrived earlier and with more polish, earning widespread acclaim for its performance, driver stability, and architectural foresight.

ATI vs Nvidia: Shader Efficiency and Design

Unified shader architectures allowed both ATI and Nvidia to assign processing power wherever it was needed, which was a huge advance over earlier designs. The two companies approached the challenge differently:

- ATI TeraScale/HD2000: Implemented fewer, but wider, SIMD shader units built on a vector-based VLIW design, with the shader units tightly integrated with the core. This meant the shader units shared the same clock speed as the GPU core, so no separate shader clock was needed. When a workload could be parallelized well, each ATI “stream processor” was highly efficient, and ATI cards could deliver higher performance-per-watt than their Nvidia rivals of the time in certain compute tasks. For example, the HD 2600 variants had 120 stream processors; the HD 2900 XT had 320. The architecture’s ability to assign any shader processor to any task, and its optimizations for efficiency, sometimes resulted in superior performance in shader-heavy applications despite the smaller number of SIMD units.

- Nvidia Tesla/G80: Focused on having more individual shader units, each narrower and less specialized than ATI’s. Nvidia introduced a scalar stream processor design where shaders operated independently from the core. To optimize performance, Nvidia gave the shaders their own clock domain, the shader clock, which could run faster than the core clock. This approach led to higher raw peak throughput but could result in lower efficiency under real-world loads, especially those requiring extensive parallelism. Nvidia’s design sometimes excelled in brute-force scenarios but could lag in efficiency per shader, particularly in DirectX 10 titles at the time.

Think of ATI shader units as crew teams working together, handling multiple pixels simultaneously. Nvidia shader units, by comparison, are individual workers with superhuman strength.

Comparing the actual numbers of shader units is like comparing hours worked by teams with hours worked by individuals. Numbers alone don’t tell the whole story without knowing the size and power of each worker or team.

In summary, the ATI cards sound better on paper with their vast numbers of shader units, but this doesn’t always translate into better performance.
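As a rough illustration of why unit counts mislead, here is a minimal Python sketch of theoretical peak shader throughput. The unit counts come from the text above; the shader clocks (1350MHz for the 8800 GTX, 742MHz for the HD 2900 XT) are assumed reference values, and the utilisation figures are purely hypothetical placeholders rather than measurements.

```python
def peak_gflops(units, clock_mhz, flops_per_clock=2):
    """Theoretical peak in GFLOPS, counting one multiply-add (2 FLOPs) per unit per clock."""
    return units * clock_mhz * flops_per_clock / 1000.0


def effective_gflops(peak, utilisation):
    """Scale the peak by the fraction of units doing useful work (0.0 to 1.0)."""
    return peak * utilisation


# Unit counts are from the article; clocks are assumed reference values.
g80_peak = peak_gflops(units=128, clock_mhz=1350)   # 8800 GTX, scalar units
r600_peak = peak_gflops(units=320, clock_mhz=742)   # HD 2900 XT, VLIW units

# Hypothetical utilisation: scalar units are easy to keep busy, while a
# 5-wide VLIW unit only reaches its peak when all five slots can be filled.
g80_real = effective_gflops(g80_peak, utilisation=0.95)
r600_real = effective_gflops(r600_peak, utilisation=0.60)

print(f"G80:  peak {g80_peak:.1f} GFLOPS, effective {g80_real:.1f}")
print(f"R600: peak {r600_peak:.1f} GFLOPS, effective {r600_real:.1f}")
```

With these made-up utilisation figures, the card with fewer units comes out ahead despite the lower peak, which is the point of the crew-versus-worker analogy.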

Nvidia’s G80 Lineup Overview

With codenames like G86, G84, and G80, the GeForce 8 series also spanned three key performance tiers:

- G86 (GeForce 8400): Aimed at entry-level users, this chip offered basic DirectX 10 support and improved video playback via Nvidia’s second-generation PureVideo HD, though gaming performance was modest.

- G84 (GeForce 8600): Targeted the mid-range market, balancing gaming capability with multimedia features. It introduced hardware-accelerated H.264 and VC-1 decoding, making it a solid choice for HTPC builds.

- G80 (GeForce 8800): The flagship architecture, debuting with the 8800 GTX and GTS. It featured a wide 384-bit memory bus, up to 128 stream processors, and full DirectX 10 support — but lacked dedicated video decode hardware, relying instead on the CPU for HD playback.

Despite the 8800 GTX’s dominance in raw performance and its role as a DirectX 10 pioneer, early drivers and high power draw made the G80 a complex beast for enthusiasts. Its lack of full video decode acceleration mirrored ATI’s R600, leaving both flagships reliant on brute force rather than media finesse.

The Card

This was another eBay purchase, this time a reference Nvidia design, so no factory overclock or other shenanigans.

It looks nice enough though, and you can’t argue with the price either. No box, I’m afraid.

Below is where my version of the 8600GT sits in the great table of 8000 series Nvidia GPUs. Being a reference design, it fits in exactly where you might expect:

My version has the lower 256MB of RAM on board but at least it’s GDDR3; it seems that the higher-capacity cards often have the slower VRAM.

Here’s the obligatory GPU-Z screenshot:

The Test System

I used the Phenom II X4 for XP testing. It’s not the most powerful XP processor by any means, but its 3.2GHz clock speed should be more than enough to get the most out of mid-2000s games, even if all four of its cores are unlikely to be utilised by most titles.

The full details of the test system:

- CPU: AMD Phenom II X4 955 3.2GHz Black Edition

- 8GB of 1866MHz DDR3 memory (showing as 3.25GB on 32-bit Windows XP and limited to 1600MHz by the platform)

- Windows XP (build 2600, Service Pack 3)

- Kingston SATA 240GB SSD as the primary drive; an AliExpress SATA drive holds the Win7 installation and games.

- ASRock 960GM-GS3 FX

- The latest supported driver version 6.14.13 from Nvidia’s website
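The 3.25GB figure in the memory line is what you would expect from a 32-bit address space, which is the reason usually given for 32-bit Windows XP not exposing all installed RAM. A small sketch of the arithmetic, assuming the missing part of the 4GiB space is reserved for devices such as the graphics card (the exact split is an assumption derived from the reported figure):

```python
GIB = 2 ** 30

address_bits = 32                       # 32-bit Windows XP
addressable_gib = 2 ** address_bits / GIB

installed_gib = 8.0                     # from the test system list
reported_gib = 3.25                     # what XP shows, per the list

# Assumption: the gap below 4 GiB is address space claimed by hardware.
reserved_gib = addressable_gib - reported_gib
unusable_gib = installed_gib - reported_gib

print(f"Addressable: {addressable_gib:.2f} GiB")
print(f"Reserved for devices (assumed): {reserved_gib:.2f} GiB")
print(f"Installed RAM XP cannot use: {unusable_gib:.2f} GiB")
```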

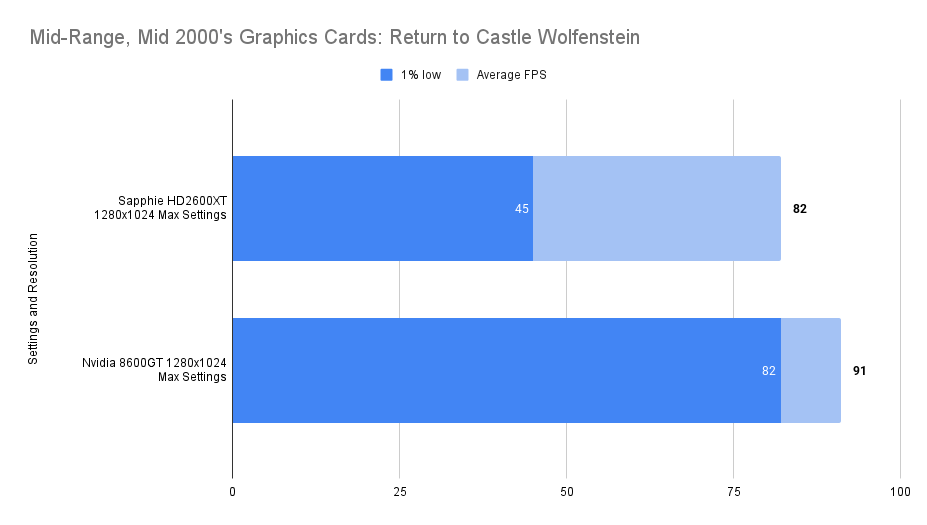

Return to Castle Wolfenstein (2001)

Game Overview:

Return to Castle Wolfenstein launched in November 2001, developed by Gray Matter Interactive and published by Activision.

Built on a heavily modified id Tech 3 engine, it’s a first-person shooter that blends WWII combat with occult horror, secret weapons programs, and Nazi super-soldier experiments.

The game features a linear campaign with stealth elements, fast-paced gunplay, and memorable enemy encounters.

The engine supports DirectX 8.0 and OpenGL, with advanced lighting, skeletal animation, and ragdoll physics.

The framerate is capped at 92fps.

Performance Notes:

The GeForce 8600GT easily takes this old game in its stride. The FPS counter was pinned at 91fps for the majority of the run. If there were no frame limit then I’m confident that the average framerate would be well into triple digits.

The brute-force nature of the G84 core is clearly better suited than the HD2600XT to processing this old OpenGL title.

Mafia (2002)

Game Overview:

Mafia launched in August 2002, developed by Illusion Softworks and built on the LS3D engine. It’s a story-driven third-person shooter set in the 1930s, with a focus on cinematic presentation, period-authentic vehicles, and a surprisingly detailed open world for its time. The engine uses DirectX 8.0 and was known for its lighting effects and physics, though it’s a bit temperamental on modern systems.

It’s more of a compatibility test these days than a performance one. The game has a hard frame cap of 62 FPS built into the engine, which makes benchmarking a little odd. Still, it’s a good title to check how older GPUs handle legacy rendering paths and driver quirks.

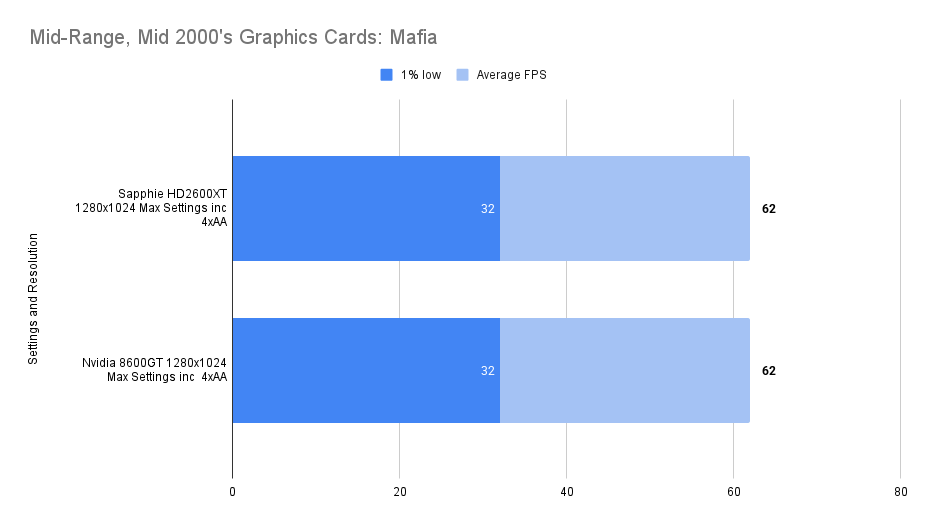

Performance Notes:

On the frame cap of course, no surprise there. I would say that the 1% low is disappointing, but it matches exactly what we got from the HD2600XT, so I guess this is probably as good as it gets playing this old game on this new(er) hardware.

Not the sort of result that makes a good benchmarking article anyway but there you go.

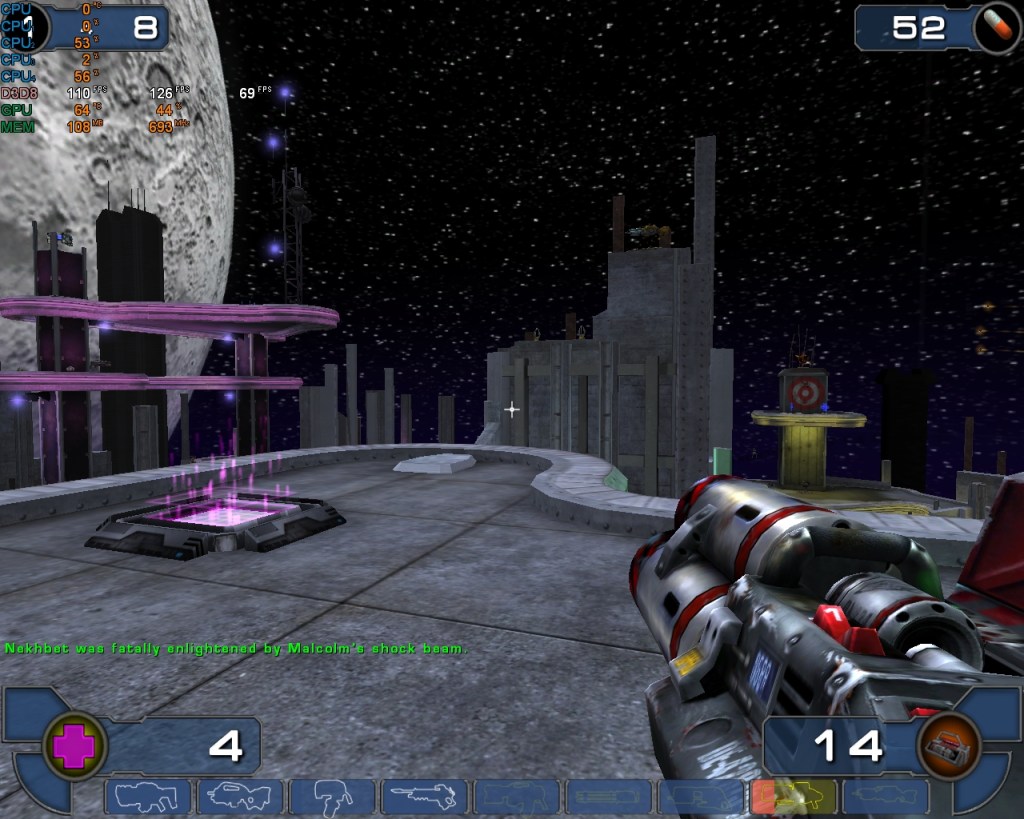

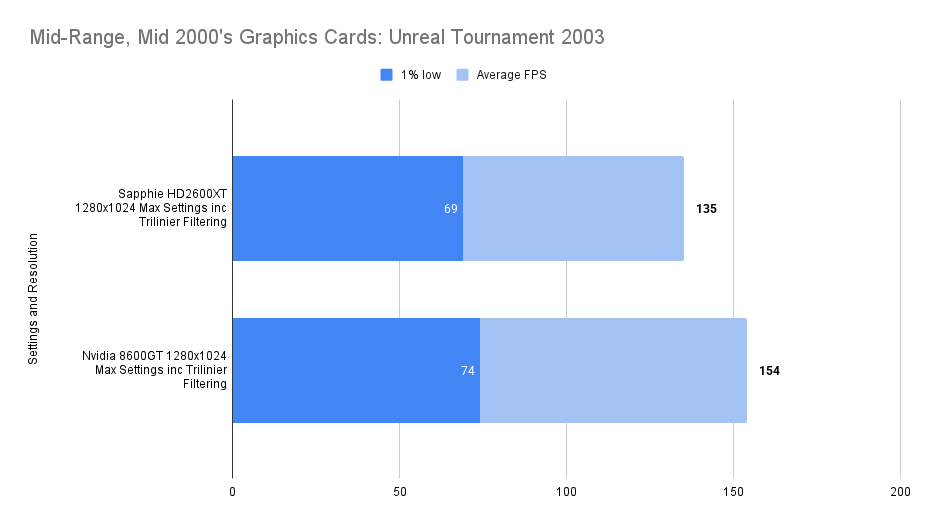

Unreal Tournament 2003 (2002)

Game Overview:

Released in October 2002, Unreal Tournament 2003 was built on an early version of Unreal Engine 2. It was a big leap forward from the original UT, with improved visuals, ragdoll physics, and faster-paced gameplay.

The engine used DirectX 8.1 and introduced support for pixel shaders, dynamic lighting, and high-res textures, all of which made it a solid test title for early 2000s hardware.

We played a lot of this and 2004 at LAN parties back in the day.

Still a great game and well worth going back to, even if you’re mostly limited to bot matches these days. There’s even a single-player campaign of sorts, though it’s really just a ladder of bot battles.

The game holds up visually and mechanically, and it’s a good one to throw into the testing suite for older cards. The uncapped frames are pretty useful (and annoyingly rare) on these old titles.

The 8600GT performs well in this game as should be expected. It does edge out a win over the HD2600XT but you wouldn’t notice, especially when using an old 75Hz monitor.

Still, a win is a win and at least it shows a difference.

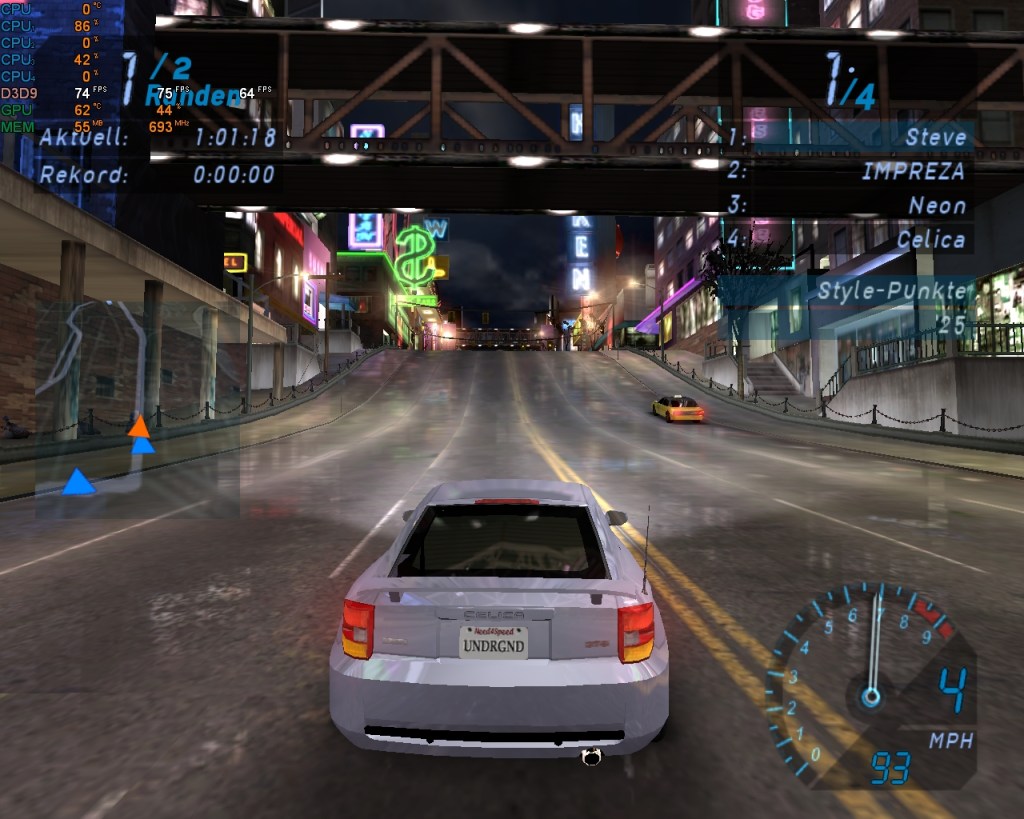

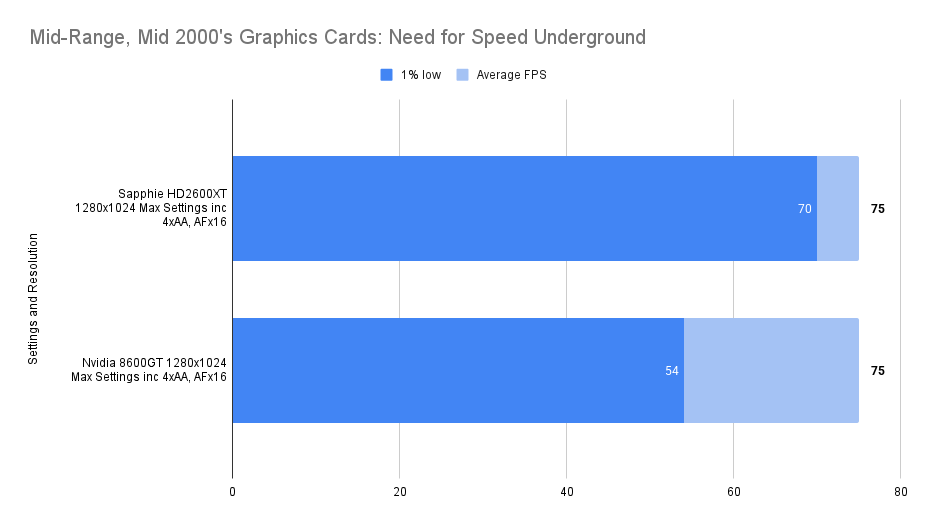

Need for Speed Underground (2003)

Game Overview:

Released in November 2003, Need for Speed: Underground marked a major shift for the series, diving headfirst into tuner culture and neon-lit street racing.

Built on the EAGL engine (version 1), it introduced full car customisation, drift events, and a career mode wrapped in early-2000s flair.

The game runs on DirectX 9 but carries over some quirks from earlier engine builds.

Apparently v1.0 of this game does have uncapped frames but mine installs a later version right off the disk.

Performance Notes:

It’s a constant pain having performance limited in these earlier titles. Would be great to know how many frames these later cards could throw out, but there are game-engine reasons why they don’t.

75 FPS feels silky smooth though, and the game is every bit as fun as I remember it being back in the day.

Well, there’s an upset. I was expecting a slight improvement on 1% low or perhaps boring identical figures, but it seems that the Nvidia card has the lower 1% Low in this game.

Obviously both cards are stuck to the enforced Vsync 75fps limit.

Doom 3 (2004)

Game Overview:

Released in August 2004, Doom 3 was built on id Tech 4 and took the series in a darker, slower direction. It’s more horror than run-and-gun, with tight corridors, dynamic shadows, and a heavy focus on atmosphere. The engine introduced unified lighting and per-pixel effects, which made it a demanding title for its time, and still a good one to test mid-2000s hardware.

The game engine is limited to 60 FPS, but it includes an in-game benchmark that can be used for testing that doesn’t have this limit.

Ultra settings are locked unless you’ve got 512 MB of VRAM, so that’s off the table here.

Performance Notes:

The Nvidia 8600GT consistently outperforms the Sapphire HD2600XT across all tested resolutions and anti-aliasing settings.

The performance gap is most noticeable at lower resolutions, particularly at 1024×768 with no anti-aliasing, where the 8600GT leads by nearly 44%.

Anti-aliasing significantly impacts both cards, but the HD2600XT suffers more, with frame rate drops between 35% and 47%, compared to 30% to 36% for the 8600GT.

At 1280×1024 with 4xAA, both cards fall below 50 FPS, though the 8600GT maintains a modest lead.

A clear win for the 8600GT, but the difference reduces with every hike in resolution and detail. Perhaps if I had a monitor that could handle higher resolutions the ATI card could get a win, but the framerate would likely have dropped to unplayable levels by that point.

Far Cry (2004)

Game Overview:

Far Cry launched in March 2004, developed by Crytek and built on the original CryEngine. It was a technical marvel at the time, with massive outdoor environments, dynamic lighting, and advanced AI. The game leaned heavily on pixel shaders and draw distance, making it a solid stress test for mid-2000s GPUs. It also laid the groundwork for what would later become the Crysis legacy.

At 800 x 600 with very high settings, both cards perform very well with triple digit framerates and smooth 1% low results.

The 8600GT gave the much greater average framerate but also had the lower 1% lows.

Still a win for the Nvidia card.

Smooth gameplay for both cards at 1024 x 768, with the 8600GT having the edge on all settings. The HD2600 seems more consistent with its frame times though, with the 1% Low figures forming a higher proportion of the average framerate.

At the highest resolution tested with the very high preset, both would probably be considered ‘playable’ with 1% Lows staying over 30, but the 8600GT wins out with a 67% higher average framerate and a 65% higher 1% Low figure.
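For reference, here is a minimal sketch of one common way to derive an average framerate, a 1% low, and the "1% low as a proportion of the average" consistency measure from a list of frame times. The definition used (mean of the slowest 1% of frames) is an assumption; capture tools differ, and the tool used for these tests may calculate it another way. The sample frame times are invented.

```python
def fps_stats(frame_times_ms):
    """Return (average FPS, 1% low FPS, low/average ratio) from frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS is total frames divided by total time.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

    # 1% low (assumed definition): FPS over the slowest 1% of frames.
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    low_fps = 1000.0 * len(worst) / sum(worst)

    return avg_fps, low_fps, low_fps / avg_fps


# Invented sample: 99 frames at 10 ms and a single 20 ms hitch.
sample = [10.0] * 99 + [20.0]
avg, low, ratio = fps_stats(sample)
print(f"avg {avg:.1f} FPS, 1% low {low:.1f} FPS, ratio {ratio:.2f}")
```

A ratio closer to 1.0 means the dips sit closer to the average, which is what "more consistent frame times" refers to above.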

F.E.A.R. (2005)

Game Overview:

F.E.A.R. (First Encounter Assault Recon) launched on October 17, 2005 for Windows, developed by Monolith Productions. Built on the LithTech Jupiter EX engine, it was a technical showcase for dynamic lighting, volumetric effects, and intelligent enemy AI. The game blended tactical shooting with psychological horror, earning acclaim for its eerie atmosphere and cinematic combat.

The Nvidia 8600GT consistently outperforms the Sapphire HD2600XT across all tested configurations. At 1024×768 with maximum settings and 4xAA/16xAF, the 8600GT delivers nearly double the minimum and average frame rates. When anti-aliasing is disabled, both cards improve significantly, but the 8600GT still leads by a wide margin—especially in average FPS, where it reaches 105 compared to the HD2600XT’s 69.

At 1152×864 with 4xAA/16xAF and soft shadows off, the HD2600XT struggles with a minimum of 10 FPS, while the 8600GT maintains a more playable 24 FPS. Turning soft shadows on further stresses both cards, but the HD2600XT drops to a minimum of 8 FPS, whereas the 8600GT holds steady at 24.

The HD2600XT’s performance is heavily impacted by anti-aliasing and soft shadows, often dipping into single-digit minimums. The 8600GT, while not flawless, demonstrates better resilience under load and smoother gameplay across the board.

Battlefield 2 (2005)

Game Overview:

Battlefield 2 launched on June 21, 2005, developed by DICE and published by EA. It was a major evolution for the franchise, introducing modern warfare, class-based combat, and large-scale multiplayer battles with up to 64 players. Built on the Refractor 2 engine, it featured dynamic lighting, physics-based ragdolls, and destructible environments that pushed mid-2000s hardware.

Performance Notes:

Without AA both cards perform similarly on average (99 vs 95 FPS), but the HD 2600XT shows a slightly higher median FPS, suggesting smoother frame pacing. However, the 8600GT has a tighter spread between average and 1% low, indicating more consistent performance.

With 4x AA the 8600GT dramatically outperforms the HD 2600XT in average FPS (83 vs 52), with a much smaller drop from no-AA performance. The HD 2600XT suffers a steep decline, losing nearly half its average FPS.
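The cost of anti-aliasing can be put into numbers with a simple percentage drop. This sketch uses the averages quoted above and assumes the 99 FPS no-AA figure belongs to the 8600GT and the 95 FPS figure to the HD 2600XT (the text lists the Nvidia card first elsewhere, but the pairing is an assumption).

```python
def aa_drop_percent(no_aa_fps, aa_fps):
    """Percentage of the no-AA framerate lost when AA is switched on."""
    return (no_aa_fps - aa_fps) / no_aa_fps * 100.0


# (no AA average, 4x AA average); the no-AA pairing is assumed.
results = {
    "8600GT": (99, 83),
    "HD 2600XT": (95, 52),
}

drops = {card: aa_drop_percent(*fps) for card, fps in results.items()}

for card, drop in drops.items():
    print(f"{card}: {drop:.1f}% lost with 4x AA")
```

On these figures the 8600GT gives up roughly a sixth of its framerate, while the HD 2600XT loses a little under half.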

Need for Speed: Carbon (2006)

Game Overview:

Need for Speed: Carbon hit the streets on October 30, 2006, developed by EA Black Box and published by Electronic Arts. As a direct sequel to Most Wanted, it shifted the franchise into nighttime racing and canyon duels, introducing crew mechanics and territory control. Built on the same engine as its predecessor but enhanced for dynamic lighting and particle effects, Carbon pushed mid-2000s GPUs with dense urban environments, motion blur, and aggressive post-processing.

Performance Notes:

At 1280×1024 with max settings and no AA, the 8600GT delivers a respectable 26 FPS average, trailing the HD2600XT slightly but maintaining smoother frame pacing with a tighter 10 FPS spread. It’s not a brute-force performer at high resolutions, but it holds its own in consistency.

Drop to 1024×768 and the 8600GT finds its stride — 38 FPS average at max settings, matching the HD2600XT’s pace and even edging it out in 1% lows (24 vs 23). This resolution sweet spot showcases the card’s balance between fill rate and shader throughput.

But the real surprise comes with 4x AA enabled:

- The 8600GT posts a 38 FPS average, more than double the HD2600XT’s 16 FPS.

- Its 1% low of 27 FPS suggests stable frame delivery even under AA load.

- In contrast, the HD2600XT collapses to a 2 FPS spread, revealing a severe AA bottleneck.

This makes the 8600GT a standout for AA-heavy titles in the DX9 era — its architecture and driver stack clearly handle multi-sample workloads with greater efficiency.

At medium settings, the HD2600XT surges ahead with a 104 FPS average, but the 8600GT still delivers a solid 82 FPS. However, its 1% low of 28 FPS hints at occasional dips, possibly due to memory bandwidth constraints or less aggressive driver-level buffering.

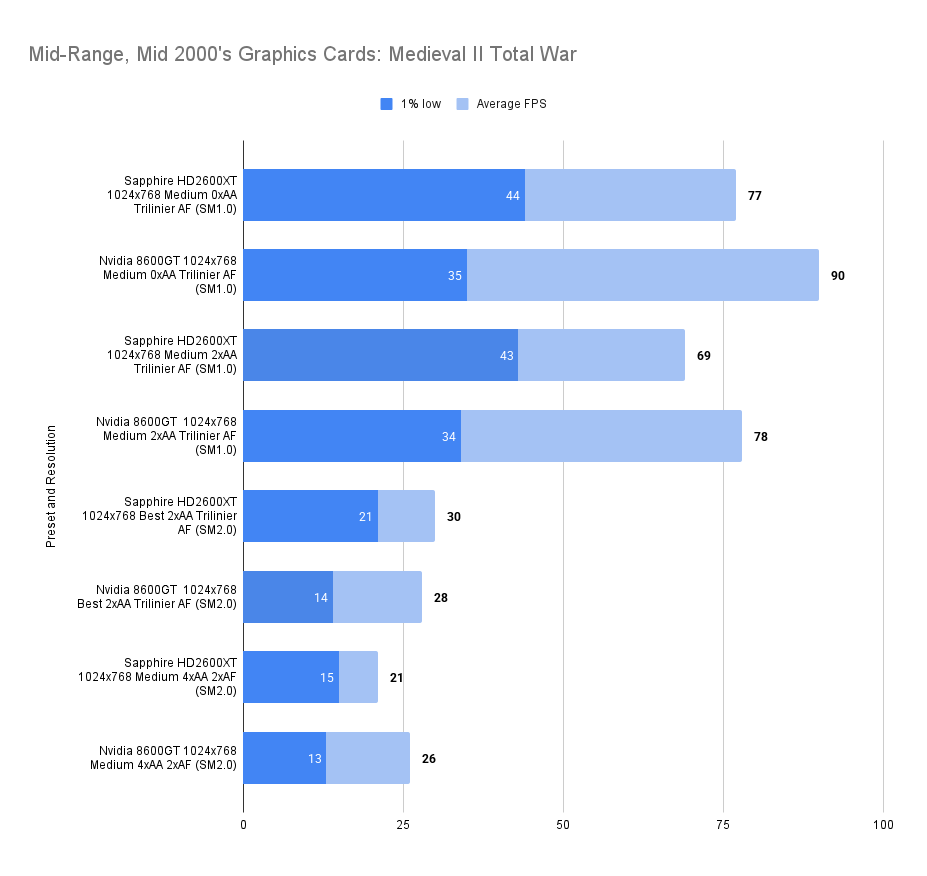

Medieval II: Total War (2006)

Game Overview:

Released on November 10, 2006, Medieval II: Total War was developed by Creative Assembly and published by Sega. It’s the fourth entry in the Total War series, built on the enhanced Total War engine with support for Shader Model 2.0 and 3.0. The game blends turn-based strategy with real-time battles, set during the High Middle Ages, and includes historical scenarios like Agincourt.

Performance Notes:

The 8600GT shows a clear edge in average FPS across all settings, especially under SM1.0 where it outpaces the HD2600XT by 13 FPS at medium settings with no AA, and 9 FPS with 2xAA. Its AA scaling is notably efficient, with only modest drops in performance as anti-aliasing increases — a trait that reinforces its reputation for strong ROP and memory handling.

Under SM2.0, both cards dip into the 20–30 FPS range, but the 8600GT maintains a slightly higher average and a wider spread, suggesting better peak performance in lighter scenes.

The 1% lows, however, reveal a trade-off: the 8600GT’s frame pacing is less consistent than the HD2600XT’s in some scenarios, particularly at SM1.0 where dips to 35 FPS contrast with the ATI card’s steadier 44.

Test Drive Unlimited (2006)

Game Overview:

Released on September 5, 2006, Test Drive Unlimited was developed by Eden Games and published by Atari. It marked a major technical leap for the Test Drive franchise, built on the proprietary Twilight Engine, which supported streaming open-world assets, real-time weather, and Shader Model 3.0 effects. The game ran on DirectX 9, with enhanced support for HDR lighting and dynamic shadows, optimized for both PC and seventh-gen consoles.

At launch, TDU was praised for its ambitious scale, vehicle fidelity, and online integration, though some critics noted AI quirks, limited damage modeling, and performance bottlenecks on lower-end rigs. The PC version especially benefited from community mods and unofficial patches that expanded car libraries and improved stability.

I always have fun playing this one, and I certainly don’t miss the voice acting in the NFS series. Those street racers need to take themselves a little less seriously.

It looks great as well. The HD2600XT does a pretty good job at Max settings but again, anti-aliasing tanks the framerate, so it needs to stay off.

Performance Notes:

In Test Drive Unlimited, the 8600GT asserts a clear lead in both average FPS and 1% lows across most tested configurations. At 1024×768 High settings with 0xAA, it delivers a smooth 61 FPS average, outpacing the HD2600XT by +4 FPS and maintaining tighter frame pacing — a testament to Nvidia’s efficient memory controller and well-optimized driver stack for streaming terrain engines.

Under 4xAA, the 8600GT’s performance remains impressively resilient, dropping only 9–13 FPS depending on resolution, while the HD2600XT suffers more dramatic dips, especially at 1280×1024, where its average plunges to 18 FPS. This reinforces the 8600GT’s reputation for strong ROP throughput and anti-aliasing scalability, particularly in deferred lighting scenarios.

The 1% lows further highlight Nvidia’s edge: while the HD2600XT dips into single digits under 4xAA, the 8600GT sustains playable lows in the 34–36 FPS range, even at higher resolutions. This consistency makes it a more reliable choice for collectors seeking smooth cruising across Oʻahu’s open roads.

Overall, the 8600GT’s blend of stable frame delivery, efficient AA handling, and driver-level polish makes it the preferred card for TDU’s streaming-heavy engine.

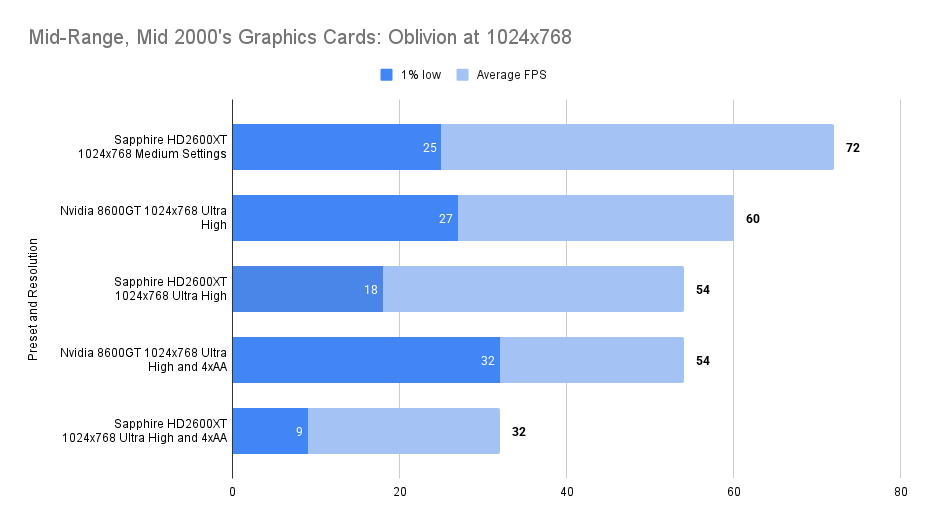

Oblivion (2006)

Game Overview:

Oblivion launched on March 20, 2006, developed by Bethesda Game Studios. Built on the Gamebryo engine, it introduced a vast open world, dynamic weather, and real-time lighting. The game was a technical leap for RPGs, with detailed environments and extensive mod support that kept it alive well beyond its release window (it’s just had a re-release recently).

Known for its sprawling world, Oblivion remains a benchmark title for mid-2000s hardware. The game’s reliance on draw distance and lighting effects makes GPUs struggle.

Performance Notes:

At 800×600, the 8600GT delivers its most impressive results. Medium settings yield a silky average of 91 FPS with a solid 30 FPS 1% low.

Even at Ultra High, the card holds steady with a 72 FPS average and no drop in 1% lows, showing remarkable consistency.

When 4xAA is enabled, performance dips to 64 FPS average, but the 1% low actually rises slightly to 32 FPS—suggesting the card handles edge smoothing without major stutter at this resolution.

Compared to the HD2600XT, the 8600GT consistently leads in frame stability and responsiveness.

At 1024×768, the 8600GT continues to impress, though the performance gap begins to show. Medium settings average 80 FPS with a 1% low of 32, still comfortably playable and responsive.

Ultra High settings drop the average to 60 FPS, with a 1% low of 27—noticeable in combat-heavy areas but not game-breaking. With 4xAA enabled, the average falls to 54 FPS, but the 1% low rebounds to 32, indicating that anti-aliasing doesn’t introduce severe stutter at this resolution.

The HD2600XT trails behind in both average and low-end performance, making the 8600GT the more stable choice for this tier.

At 1280×1024, the 8600GT begins to show its age. Ultra High settings yield a modest 46 FPS average, with a 1% low of 29, playable, but with occasional dips in dense environments like forests or cities.

Enabling 4xAA at this resolution pushes the card to its limits, dropping the average to 38 FPS and the 1% low to 17.

The HD2600XT struggles even more here, with lower averages and harsher dips, reinforcing the 8600GT’s edge in maintaining smoother gameplay under pressure.

Crysis (2007)

Game Overview:

Crysis launched in November 2007 and quickly became the go-to benchmark title for PC gamers. Built on CryEngine 2, it pushed hardware to the limit with massive draw distances, dynamic lighting, destructible environments, and full DirectX 10 support.

Performance Notes:

Medium settings at 800 x 600 is where you need to be to have a somewhat playable experience. In this game, input latency is awful at lower framerates making it hard to play.

The two cards are neck and neck at this resolution, with just a margin of error difference between them.

Comparable results also at 1024 x 768, with a surprise ‘win’ for the HD 2600XT when AA is enabled, not that it was at all playable.

Another close result at 1280 x 1024, these two cards really are reasonably matched whilst playing this game.

A loss then for our 8600GT, but not by so much that you would actually notice.

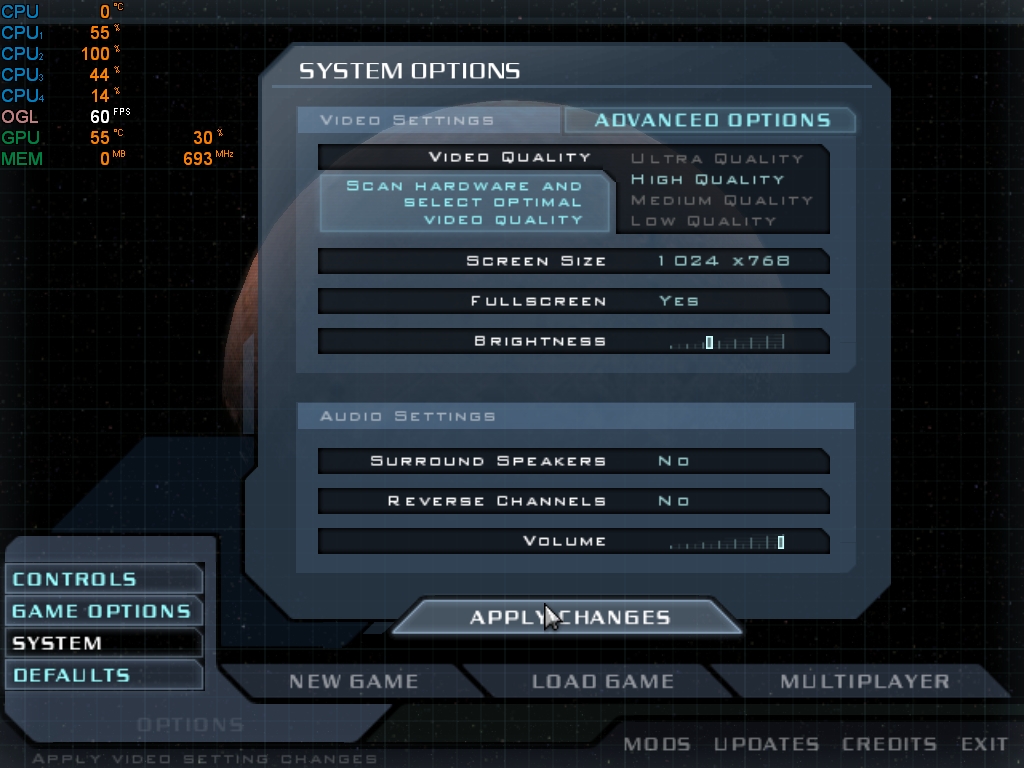

S.T.A.L.K.E.R. (2007)

Game Overview:

Released in March 2007, S.T.A.L.K.E.R.: Shadow of Chernobyl was developed by GSC Game World and runs on the X-Ray engine. It’s a gritty survival shooter set in the Chernobyl Exclusion Zone, blending open-world exploration with horror elements and tactical combat. The engine supports DirectX 8 and 9, with optional dynamic lighting and physics that can push older hardware to its limits.

Performance Notes:

In S.T.A.L.K.E.R., the GeForce 8600GT delivers a performance profile shaped by two invisible ceilings: a stubborn 75 FPS frame cap and a 256MB VRAM saturation point. Despite toggling the limiter off, the game remains locked at 75 FPS, turning every average into a ceiling test and every 1% low into the true measure of smoothness.

At 1024×768 Medium with Static Lighting, the 8600GT hits the limiter cleanly: 74 FPS low, 75 FPS average. Frame pacing is flawless, and the card shows no signs of stress. The HD2600XT matches the average but trails slightly in lows, hinting at Nvidia’s tighter driver-level delivery in simpler lighting scenarios.

Stepping up to Max settings with Low AA and Static Lighting, the 8600GT again posts a 74/75 split, proving that baked lighting and AA overhead pose no threat. The HD2600XT, however, drops to 41/67, revealing a 33 FPS gulf in lows and a clear struggle to maintain smooth delivery under heavier load. The 8600GT’s ability to hold the cap here speaks to its efficient shader pipeline and memory controller, which suit static-lighting engines well.

I thought I would test at 1280×1024 Max and Low AA and Static Lighting, to see if the card could continue to knock out 75fps.

Finally we found the limit though and the card had to do some work. The 8600GT posts 33 FPS lows and 68 FPS average, still below the cap, but impressively close given the resolution bump.

This suggests the card’s ROPs and memory bandwidth are well-tuned for higher-res static scenes, even if the lows hint at occasional stutter.

The real stress test comes with Dynamic Lighting. At 1024×768 Max settings with Dynamic Lighting switched on, the 8600GT’s average dips to 38 FPS, with a 1% low of 11 FPS.

This exposes the card’s limits in real-time shadow rendering, where fill-rate and shader complexity collide. But the real culprit? VRAM saturation.

Afterburner shows the 256MB buffer fills within seconds and never recovers, confirming that dynamic lighting pushes the card beyond its memory capacity.

Still, the 8600GT outpaces the HD2600XT’s 14/27 result (also on the VRAM limit), offering an 11 FPS lead in average (38 vs 27) and a more playable experience overall. The lows are harsh, but the card holds its ground better than its rival.
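To get a feel for where 256MB goes, here is a back-of-the-envelope sketch of how much memory fixed-size render targets might take. The dynamic lighting mode is generally described as a deferred renderer, but the buffer layout below (three 64-bit G-buffer targets, a 32-bit depth buffer, and two 32-bit colour buffers) is a hypothetical example, not the engine's documented layout.

```python
MIB = 2 ** 20
VRAM_MIB = 256  # card capacity, from the article


def target_mib(width, height, bytes_per_pixel, count=1):
    """Memory in MiB for `count` render targets of the given size."""
    return width * height * bytes_per_pixel * count / MIB


def render_targets_mib(width, height):
    """Total for a hypothetical deferred-rendering buffer layout."""
    g_buffer = target_mib(width, height, bytes_per_pixel=8, count=3)
    depth = target_mib(width, height, bytes_per_pixel=4)
    colour = target_mib(width, height, bytes_per_pixel=4, count=2)
    return g_buffer + depth + colour


for width, height in [(1024, 768), (1280, 1024)]:
    used = render_targets_mib(width, height)
    print(f"{width}x{height}: {used:.0f} MiB of targets, "
          f"{VRAM_MIB - used:.0f} MiB left for textures and shadow maps")
```

Under these assumptions the render targets are only a modest slice of the card's memory, so textures, shadow maps, and geometry at Max settings would have to account for most of the saturation Afterburner reports.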

Assassin’s Creed (2007)

Game Overview:

Assassin’s Creed launched in November 2007, developed by Ubisoft Montreal and built on the Anvil engine. It introduced open-world stealth gameplay, parkour movement, and historical settings wrapped in sci-fi framing. The first entry takes place during the Third Crusade, with cities like Damascus, Acre, and Jerusalem rendered in impressive detail for the time.

Performance Notes:

In Assassin’s Creed, the GeForce 8600GT delivers a nuanced performance profile, marked by close competition with the HD2600XT and a consistent edge in post-processing scenarios.

Unlike S.T.A.L.K.E.R., VRAM usage here remains modest, peaking at 175MB (at least at 1024×768), well below the 8600GT’s 256MB ceiling. This leaves headroom for effects and texture loads, helping explain the card’s smoother delivery under full graphical load.

At 1024×768 with mid-range settings the 8600GT posts a 41 FPS low and 60 FPS average, trailing the HD2600XT’s 45/67 by a narrow margin.

Still, the 8600GT maintains playable lows and respectable averages, with no signs of stutter or memory strain.

When pushed to higher settings, the 8600GT flips the script: 35 FPS low and 52 FPS average, edging ahead of the HD2600XT’s 31/49.

This reversal hints at Nvidia’s stronger handling of high-detail geometry and texture filtering. The difference is subtle, but consistent, a sign of architectural efficiency under heavier load.

The real highlight comes with Multisampling 3/3 and Post FX enabled. Here, the 8600GT posts a 31 FPS low and 44 FPS average, outpacing the HD2600XT’s 25/37.

This 7 FPS lead in average and 6 FPS in lows marks the card’s best relative showing, and suggests that Nvidia’s ROPs and post-processing pipeline are better suited for bloom, HDR, and ambient effects.

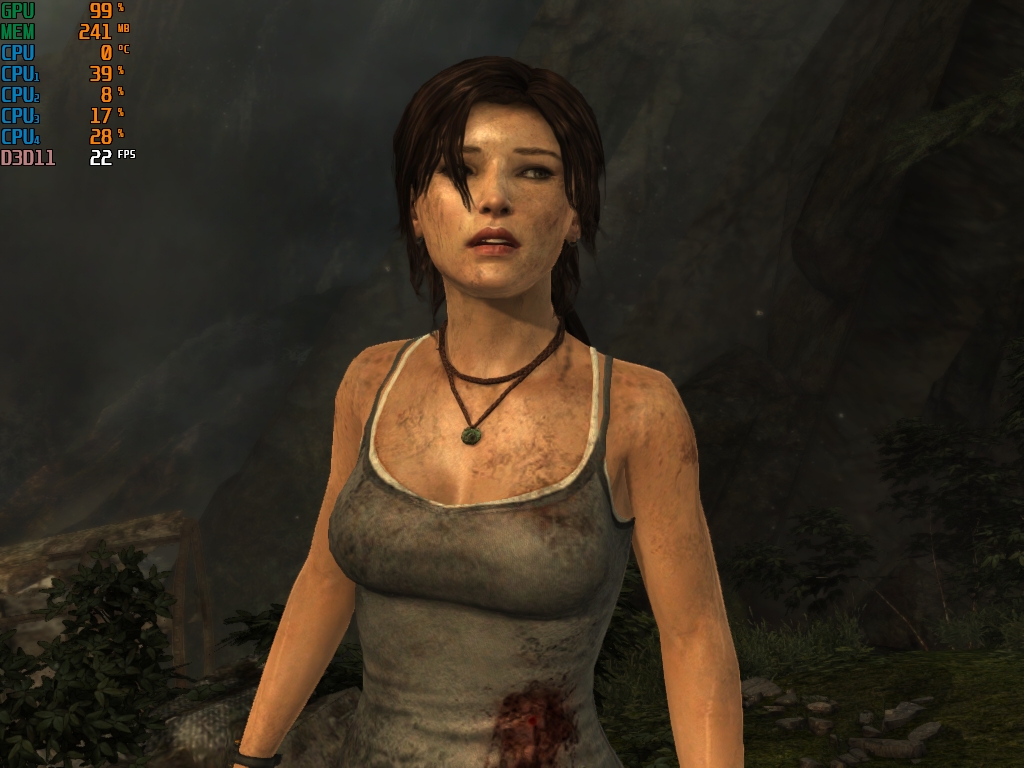

Tomb Raider (2013)

Game Overview:

Released in March 2013, Tomb Raider marked a gritty reboot for the franchise, developed by Crystal Dynamics and powered by the Foundation Engine. It follows a young Lara Croft’s harrowing origin story on the mysterious island of Yamatai, blending cinematic storytelling with survival mechanics, exploration, and third-person combat. The engine supports DirectX 9 and 11, with advanced features like TressFX hair physics and tessellation—though these push beyond the capabilities of legacy GPUs. On older hardware like the GeForce 8600GT, the game remains playable in DX9 mode with reduced settings, offering a surprisingly stable experience when paired with Windows 7 and the final WHQL 340.52 driver.

At 1024×768 on Normal settings, the 8600GT manages a modest 23 FPS average, with 1% lows dipping to 18. This configuration includes FXAA and 4x anisotropic filtering, which help smooth edges and textures without overwhelming the GPU.

While not fluid by modern standards, the experience remains surprisingly stable for a legacy card, with only a 5 FPS gap between average and low, suggesting consistent frame pacing.

Compared to the HD2600XT, the 8600GT edges ahead slightly in both average and lows, making it the more reliable choice for cinematic playthroughs at this tier.

Switching to Low settings at 1024×768, the 8600GT stretches its legs. With FXAA and bilinear filtering enabled, the card delivers a 36 FPS average and a 1% low of 28, offering a far smoother experience.

The 8 FPS gap between average and low indicates occasional dips during physics-heavy moments or cutscenes, but overall gameplay remains responsive and playable.

Against the HD2600XT, the 8600GT again leads by a few frames, reinforcing its strength in maintaining frame stability under reduced visual load.

Synthetic Benchmarks

The card was put through my usual suite of synthetic benchmarks with the following results:

3d Mark 2000

3d Mark 2001 SE

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
| --- | --- | --- | --- |
| Score | 35,362 | 29,282 | 30,145 |
| Fill Rate (Single Texturing) | 2,445.8 | 2,271.4 | 2,264.9 |
| Fill Rate (Multi-Texturing) | 8,120.6 | 5,325.1 | 6,115.3 |

A comfortable win for the Nvidia card but the single texturing performance from the Lenovo card is better when overclocked.

This is somewhat counter-intuitive considering the ATI card has half the texture mapping units (8 compared to 16) but this result is repeated in later tests.

The ATI architecture must be more efficient in this type of test.
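One rough way to sanity-check the efficiency idea is to compare the measured multi-texturing fill rate with the theoretical peak, which is simply texture units multiplied by core clock. The TMU counts and measured figures are from this article; the core clocks (540MHz for a reference 8600GT, 800MHz for the HD2600XT) are assumed reference values and may not match the exact cards tested, so treat the output as a sketch.

```python
def peak_mtexels(tmus, core_clock_mhz):
    """Theoretical texel fill rate in MTexels/s: one texel per TMU per clock."""
    return tmus * core_clock_mhz


cards = {
    # name: (TMUs, assumed core clock in MHz, measured multi-texturing result)
    "8600GT": (16, 540, 8120.6),
    "Sapphire HD2600XT": (8, 800, 6115.3),
}

efficiency = {}
for name, (tmus, clock, measured) in cards.items():
    peak = peak_mtexels(tmus, clock)
    efficiency[name] = measured / peak
    print(f"{name}: peak {peak} MTexels/s, measured {measured}, "
          f"{efficiency[name]:.0%} of peak")
```

With those assumed clocks, both cards land within a few percent of their theoretical peaks, and the ATI card's higher clock makes up much of the ground its halved TMU count would suggest it should lose.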

3d Mark 2003

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
| --- | --- | --- | --- |
| Score | 16848 | 13093 | 12,896 |
| Fill Rate (Single Texturing) | 2125.4 | 2026.30 | 1997.2 |
| Fill Rate (Multi-Texturing) | 7752.20 | 5251.50 | 5999.8 |
| Pixel Shader 2.0 | 220.1 | 123.0 | 112.1 |

A win for the 8600GT on all metrics.

3d Mark 2006

In comparison to the HD2600XT we see a clear win for the 8600GT again, but only marginally in the SM3.0 tests.

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
| --- | --- | --- | --- |
| Score | 7261 | 5908 | 6072 |
| Shader Model 2.0 Score | 2733 | 1850 | 1831 |
| HDR/Shader Model 3.0 Score | 2685 | 2531 | 2635 |
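To show how uneven the gap is between the SM2.0 and SM3.0 tests, the 3DMark 2006 figures above can be turned into percentage leads. This is just arithmetic on the table values; the helper name is made up for illustration.

```python
def lead_percent(score, rival_score):
    """How far `score` is ahead of `rival_score`, as a percentage of the rival."""
    return (score - rival_score) / rival_score * 100.0


# (8600GT, Lenovo HD2600XT, Sapphire HD2600XT), taken from the table.
rows = {
    "Score": (7261, 5908, 6072),
    "Shader Model 2.0 Score": (2733, 1850, 1831),
    "HDR/Shader Model 3.0 Score": (2685, 2531, 2635),
}

leads = {}
for test, (nvidia, lenovo, sapphire) in rows.items():
    leads[test] = (lead_percent(nvidia, lenovo), lead_percent(nvidia, sapphire))
    print(f"{test}: +{leads[test][0]:.1f}% vs Lenovo, "
          f"+{leads[test][1]:.1f}% vs Sapphire")
```

The SM2.0 lead works out at nearly 50%, while the SM3.0 lead over the Sapphire card is under 2%.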

Unigine Sanctuary

Another clean sweep by the Nvidia card, showing much better performance than the two HD2600XT models.

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
| --- | --- | --- | --- |
| Score | 2194 | 1604 | 1615 |
| Average FPS | 51.7 | 37.8 | 38.1 |
| Min FPS | 34.5 | 27.9 | 29 |
| Max FPS | 66 | 49.6 | 51 |

Temperature & Fan Speed

With fresh thermal paste, this card tops out at 75 degrees after 10 minutes of running at full load, so it is definitely hotter than the HD2600XT tested before. Even at this temperature, the fan refused to increase in speed above 40%.

The case is reasonably well ventilated (though perhaps not to modern standards) with a 120mm case fan blowing in from the side panel over the GPU, another 120mm fan on the front and an 80mm exhaust fan.

DirectX 10 Testing

DirectX 9 dominated PC gaming for nearly a decade. Launched in 2002, it became the standard thanks to broad compatibility with Windows XP, which remained popular well into the 2010s.

In contrast, DirectX 10 debuted exclusively on Vista, an operating system many gamers avoided, which made adoption slow.

Developers stuck with DX9 to maintain parity with consoles like the Xbox 360 and to avoid rebuilding engines from scratch, since DX10 introduced a new driver model and lacked backward compatibility.

Game engines such as Unreal Engine 3 and Source were deeply optimized for DX9, and rewriting them was costly.

Even as late as 2011, a large portion of gamers still used DX9 hardware, making it the safer choice.

We should still have a look at a few tests to see how well these early unified-shader cards cope with what was new technology at the time.

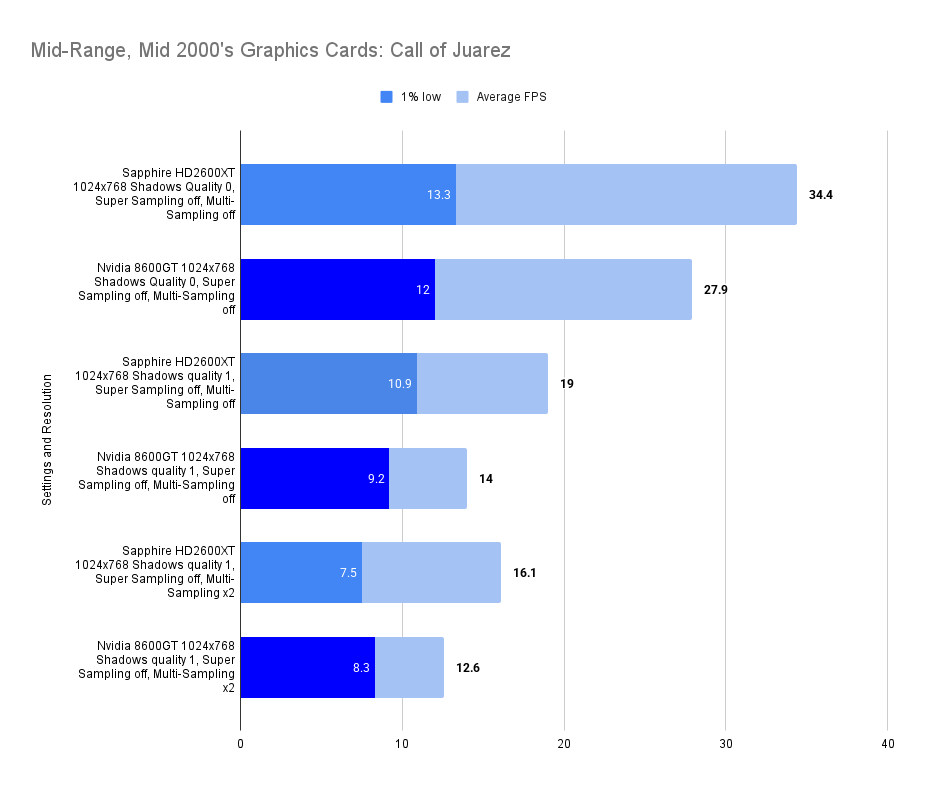

Call of Juarez (2006)

Call of Juarez launched in Europe in September 2006, developed by Techland and powered by the Chrome Engine 3. It’s a first-person shooter set in the American Wild West, blending gritty gunfights with biblical overtones and dual protagonists: Reverend Ray, a gun-slinging preacher, and Billy Candle, a fugitive accused of murder. The game alternates between stealth and action, with period-authentic weapons, horseback riding, and stylized sepia-toned visuals.

Built for DirectX 9.0c and optionally DX10, the Chrome Engine delivers dynamic lighting, HDR effects, and physics-driven interactions.

I only have the GOG version of the game, which will only run in DX10 (the DX9 executable seems to be missing).

Performance Notes:

A very similar and underwhelming result for both cards here. The 8600GT generally loses out to the Sapphire card on average framerate.

In a few instances the minimum frames are better with Nvidia, but there is no huge difference in reality.

Neither card would be a good pick to play this game on.

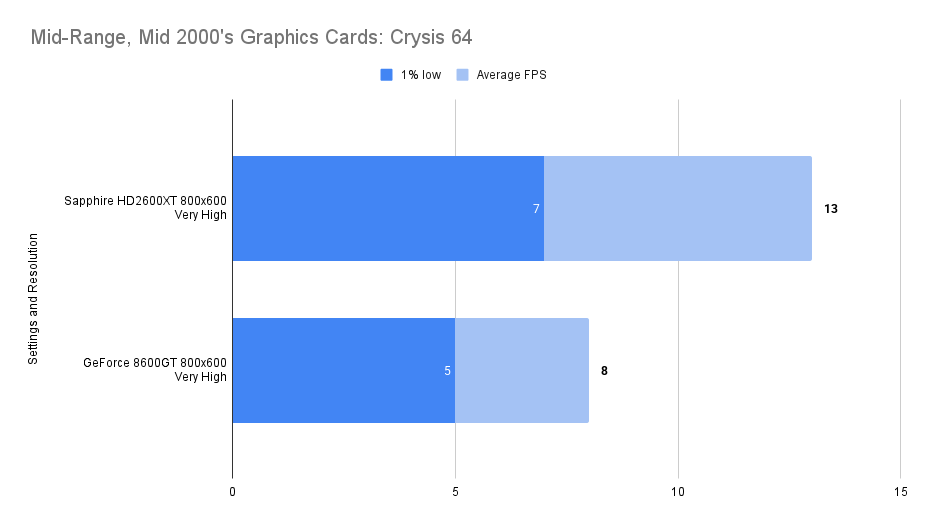

Crysis 64Bit

All you need to do to play DX10 Crysis is run it on a 64-bit operating system, and the DX10-enabled version of the game launches.

Research suggests that everything needs to be switched to ‘Very High’ to get the actual DX10 experience though, which poses something of a problem, as we saw these cards struggle even at lower settings.

Performance Notes:

At 800×600 with Very High settings, Crysis becomes a brutal stress test for both cards. The 8600GT delivers an average of 8 FPS, with 1% lows dipping to 5 FPS, resulting in frequent stutter and input lag during combat.

The HD2600XT, while also VRAM-bound, manages a slightly smoother ride with 13 FPS average and 7 FPS lows.

Both cards render the full DX10 feature set: soft shadows, volumetric fog and parallax occlusion mapping.

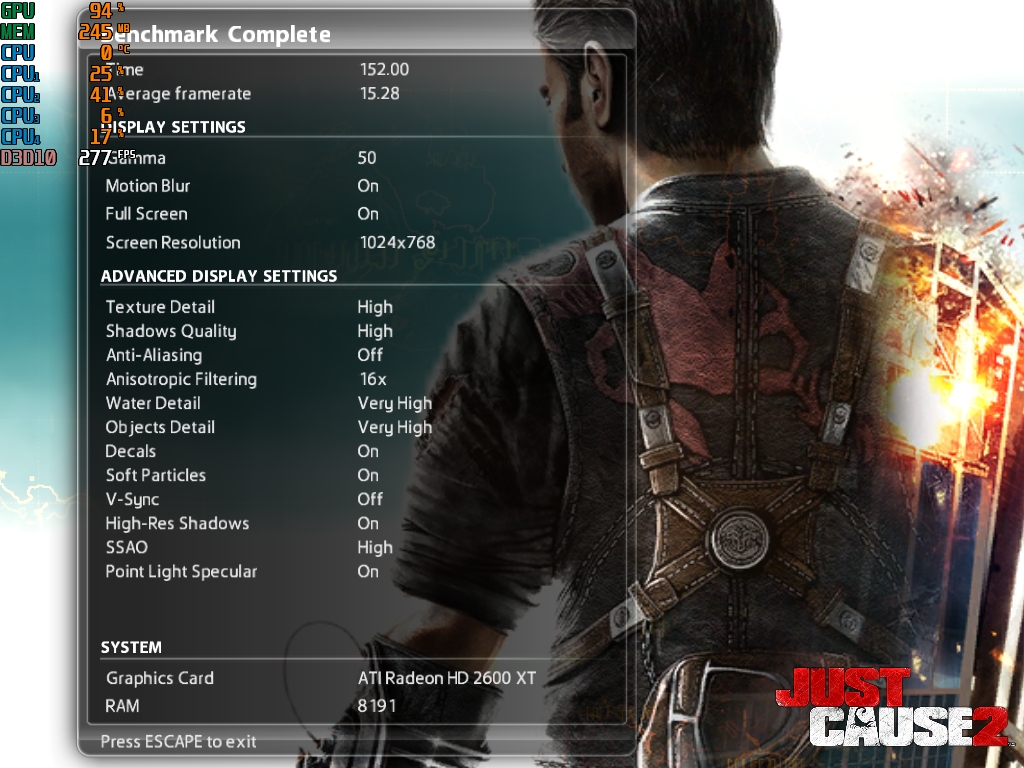

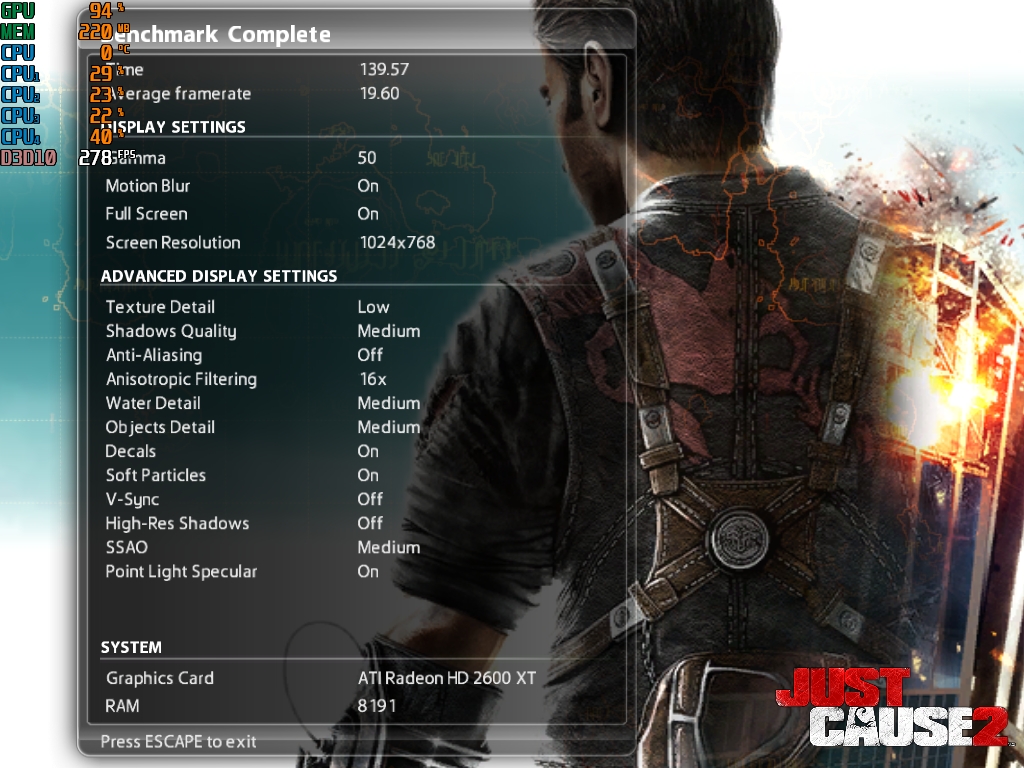

Just Cause 2

Just Cause 2 launched in March 2010, developed by Avalanche Studios using the Avalanche Engine 2.0. Set in the tropical nation of Panau, it’s an open-world action game starring Rico Rodriguez, whose grappling hook and parachute enable chaotic stunts and explosive sabotage.

The previous games were DirectX 9 titles with some optional DirectX 10 features.

Just Cause 2 was built for DirectX 10. The game features vast draw distances, dynamic weather, and physics-driven destruction, pushing GPU limits with cinematic flair and sandbox freedom.

Performance Notes:

In the Dark Tower benchmark, both cards deliver a surprisingly close race. At High settings, the HD2600XT edges ahead by a fraction, but the 8600GT flips the script at Medium, pulling slightly ahead with 19.92 FPS versus the HD2600XT’s 19.60. The margin of difference is negligible, suggesting both cards are operating at the edge of their fill rate and shader throughput limits.

3d Mark Vantage

This is the 3d Mark version that was made for DX10 and Vista. I ran it on the Entry preset with the CPU tests disabled.

| | Nvidia GeForce 8600GT | Sapphire HD2600XT | Lenovo HD2600XT |
| --- | --- | --- | --- |
| GPU Score | 8,201 | 7,900 | 7,226 |

The results show a win for the 8600GT, but by a reasonably small margin, at least compared to some of the earlier tests in the suite.
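To put the margins side by side, here is a small sketch that works out the 8600GT's percentage lead over the Sapphire HD2600XT from the overall scores reported in this article. The `lead_percent` helper is just a name chosen for this example, and picking the Sapphire card as the baseline is an arbitrary choice.

```python
# Overall scores copied from the tables in this article:
# (Nvidia 8600GT, Sapphire HD2600XT).
scores = {
    "3d Mark 2001 SE": (35362, 30145),
    "3d Mark 2003": (16848, 12896),
    "3d Mark 2006": (7261, 6072),
    "3d Mark Vantage (GPU)": (8201, 7900),
}


def lead_percent(nvidia, ati):
    """Percentage by which the first score exceeds the second."""
    return (nvidia / ati - 1) * 100


leads = {name: lead_percent(*pair) for name, pair in scores.items()}
for name, lead in leads.items():
    print(f"{name}: 8600GT ahead by {lead:.1f}%")
```

On these figures the lead shrinks from roughly 17 to 31 percent in the older suites to under 4 percent in Vantage.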

Summary and Conclusions

There we go then: the GeForce 8600GT. I think there’s enough evidence to declare this a reasonable choice for DirectX 9.0 games and earlier.

The card has been completely stable from the moment it was dropped in: all games run without issue and driver installation was no problem.

It doesn’t seem strong enough for later DirectX 10 titles, however, and I don’t think additional VRAM will solve all of the problems, though a 512MB model would certainly help with some of them.

The card does run a little hot, though 75 degrees isn’t a huge issue. The fan not going above 40 percent is strange. I would definitely steer clear of passively cooled models if possible, but that’s generally good advice for any card of this era.

It is certainly the better option over the competing HD2600XT from ATI, beating it in the majority of benchmarks, and where the ATI card steals a win, it’s never by a significant margin.

Prices are low, it requires no external power, it supports SLI, they look good – plenty of reasons to add one to your collection.

There are actually still a huge number of articles out there covering different 8600GT models from when they were released. Here are just a few:

https://www.trustedreviews.com/reviews/xfx-fatal1ty-8600-gt

https://www.overclockersclub.com/reviews/gbyte_86_gts_silent/

https://www.pcstats.com/articles/2146/index.html
