- RRP: £90

- Release date: June 28 2007

- Purchased in December 2024

- Purchase Price: £14.95 delivered

Introduction – The HD2000 Series

From Fixed-Function to Unified Shaders

In mid-2007, ATI unveiled the R600 family of GPUs, sold as the Radeon HD 2000 series.

These new GPUs were built on a new architecture called TeraScale 1 and marked a radical shift in GPU design, moving away from the dedicated, fixed-purpose pipelines that every previous generation had used, all the way back to the very first 3D accelerators.

Individual vertex and pixel shaders were replaced by a unified shader model in which a pool of stream processors could handle vertex, pixel, and geometry shading interchangeably.

This wasn’t ATi’s first foray into unified shaders, though: the company had already designed Xenos, the GPU inside the Xbox 360 console.

The R600 family competed directly with Nvidia’s G80-based GeForce 8 series, which also used unified shaders and had been released half a year earlier, in November 2006.

ATI vs Nvidia: Shader Efficiency and Design

Unified shader architectures allowed both ATI and Nvidia to assign processing power where it was needed, a huge advance over earlier designs. The two companies approached the challenge differently:

- ATI TeraScale/HD2000: Implemented fewer, but wider, shader units. Each unit is a five-wide VLIW block, so the HD 2600 variants’ 120 “stream processors” are 24 such blocks, and the HD 2900 XT’s 320 are 64 of them. That gives a high raw ALU count and a high theoretical peak, but all five lanes only do useful work when the shader compiler can find five independent operations to pack together. When it can’t, lanes sit idle, so real-world throughput can fall well short of the headline figures.

- Nvidia Tesla/G80: Focused on having more individual shader units (128 on the 8800 GTX, 32 on the 8600 GT), each a simple scalar ALU handling one operation at a time, but running on a separate shader clock well above the core clock. The ALU counts look small next to ATI’s, but scalar units are much easier to keep busy, so more of their theoretical throughput turns into real-world performance.

Think of ATI’s shader units as crew teams working together on several jobs at once. Nvidia’s shader units, by comparison, are individual workers with superhuman speed.

Comparing the raw number of shader units is like comparing hours worked by teams with hours worked by individuals: the numbers alone don’t tell the whole story without knowing the size and speed of each team or worker.

In summary, the ATi cards sound better with their vast numbers of shader units, but this doesn’t always translate into better performance.
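To put rough numbers on that, the sketch below works out theoretical shader throughput as units × lanes per unit × FLOPs per lane per clock × clock. It assumes reference clocks (800 MHz for the HD 2600 XT and a 1190 MHz shader clock for the 8600 GT), counts one multiply-add per ALU per clock, and ignores the extra MUL the GeForce 8 units could sometimes issue, as well as texture units, ROPs and memory bandwidth. The `ShaderArray` class and the “two of five lanes busy” case are purely illustrative, not measurements from this card.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ShaderArray:
    name: str
    units: int              # independently scheduled shader units
    lanes_per_unit: int     # ALUs per unit: 5 for ATI's VLIW blocks, 1 for Nvidia's scalar units
    shader_clock_mhz: int   # assumed reference clock the ALUs run at
    flops_per_lane: int = 2  # one multiply-add (2 FLOPs) per ALU per clock

    @property
    def advertised_sps(self) -> int:
        """The 'stream processor' count on the box: units x lanes."""
        return self.units * self.lanes_per_unit

    def gflops(self, busy_lanes: Optional[float] = None) -> float:
        """Shader arithmetic throughput in GFLOPS.

        busy_lanes is the average number of lanes per unit doing useful work;
        leaving it out gives the theoretical peak with every lane busy.
        """
        lanes = self.lanes_per_unit if busy_lanes is None else busy_lanes
        return self.units * lanes * self.flops_per_lane * self.shader_clock_mhz / 1000


hd2600xt = ShaderArray("HD 2600 XT", units=24, lanes_per_unit=5, shader_clock_mhz=800)
gf8600gt = ShaderArray("GeForce 8600 GT", units=32, lanes_per_unit=1, shader_clock_mhz=1190)

for card in (hd2600xt, gf8600gt):
    print(f"{card.name}: {card.advertised_sps} SPs, peak {card.gflops():.1f} GFLOPS")

# Hypothetical shader where the compiler only finds two independent operations per bundle
print(f"HD 2600 XT with 2 of 5 lanes busy: {hd2600xt.gflops(busy_lanes=2):.1f} GFLOPS")
```

Under these assumptions the HD 2600 XT has roughly two and a half times the 8600 GT’s peak (192.0 vs 76.2 GFLOPS), but with only two of each five lanes busy it drops to 76.8 GFLOPS, level with the Nvidia card. That is one reason the stream processor count on its own is a poor guide.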

Shader Handling: Games and Compatibility

Unified shader cards transformed gaming visuals in DirectX 10 titles, with DirectX 10 itself arriving as part of Windows Vista and later.

They allowed dynamic assignment of computing resources, boosting flexibility and performance in modern games that were built for these new capabilities.

Performance and image quality improved, especially in games leveraging Shader Model 4.0 features like geometry shaders and high dynamic range processing.

However, adoption was gradual, and new cards faced mixed results until the software caught up.

Many popular games during the HD 2000 era (and later) still targeted DirectX 9 and Windows XP. Here, performance wasn’t always optimal; in some cases HD 2000 cards were outperformed by older hardware better tailored to legacy APIs, especially before drivers matured.

ATI’s HD2000 Lineup Overview

With codenames like RV610, RV630, and R600, the HD2000 series spanned three performance tiers:

- RV610 (HD 2400): Designed for budget systems, featuring efficient power use and enhanced media playback through its on-die Unified Video Decoder (UVD).

- RV630 (HD 2600): Struck a balance between gaming and multimedia, pairing more shader power with the same UVD video decoding hardware.

- R600 (HD 2900): The flagship, built for raw power, equipped with a massive 512-bit memory bus and workstation-class ambitions, though it notably omitted dedicated hardware UVD.

Despite impressive specs, especially on the HD 2900 XT, the generation was a mixed bag for enthusiasts.

Driver immaturity and the lack of hardware video decode on the high-end model tempered excitement in the early months.

The Sapphire HD2600XT

I couldn’t help but buy this one when I saw it on eBay. It’s a shame there was no box or anything; in fact, it came through the letterbox in a jiffy bag with only a thin layer of bubble wrap to protect it. P&P was free, but really… come on.

It was completely packed with dust on arrival, so I stripped it down, cleaned it out and repasted it, which is always a satisfying experience.

It worked flawlessly though, with no issues at all during installation and setup. I downloaded the latest driver from the AMD website: Catalyst 13.4 (13.9 for the Win7 testing later). I used DDU in safe mode to clear out the older driver, which is easily done once you find a version that works in XP (for me, that’s version 17.0.8.6).

As you can see from the connector at the top, this card is also CrossFire capable, so I’m keeping an eye out for another to run alongside it in the same system.

A short table of HD2000 series cards is below, with this Sapphire card highlighted alongside the Lenovo version tested previously, followed by the reference figures.

Looks-wise, the Sapphire card is great. I love Sapphire cards from this era; they’re always up there with the best-looking ATi options, and this one is no exception:

Here’s the GPU-Z screenshot of this Sapphire card:

The Test System

I use the Phenom II X4 for XP testing. It’s not the most powerful XP processor, but its 3.2GHz clock speed should be more than enough to get the most out of mid-2000s games, even if all four of its cores are unlikely to be utilised.

The full details of the test system:

- CPU: AMD Phenom II X4 955 Black Edition, 3.2GHz

- 8GB of 1866MHz DDR3 memory (showing as 3.25GB on 32-bit Windows XP, and running at 1600MHz due to a platform limit)

- Windows XP (build 2600, Service Pack 3)

- Kingston 240GB SATA SSD as the primary drive; a second AliExpress SATA drive holds the Win7 installation and games

- Motherboard: ASRock 960GM-GS3 FX

- Driver: Catalyst 13.4, the latest supported version
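Why does 8GB show up as 3.25GB? 32-bit Windows XP can only address 4 GiB of physical memory, and part of that range is mapped to hardware such as the graphics card’s memory window, so it can’t be used as RAM. A minimal sketch of the arithmetic; the 0.75 GiB device reservation is simply inferred from the 3.25GB figure above, and the real size varies with the motherboard and installed cards. `usable_ram` is a made-up helper for illustration.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2

# 32-bit Windows XP works within a 4 GiB physical address space.
ADDRESS_SPACE = 4 * GIB


def usable_ram(installed: int, device_reserved: int) -> int:
    """RAM the OS can use: the part of the 4 GiB space not mapped to devices,
    capped at what is actually installed."""
    return min(installed, ADDRESS_SPACE - device_reserved)


installed = 8 * GIB
reported = int(3.25 * GIB)                  # what XP shows on this system
device_reserved = ADDRESS_SPACE - reported  # inferred, not measured

print(f"Reserved for devices: {device_reserved // MIB} MiB")          # 768 MiB
print(f"Usable: {usable_ram(installed, device_reserved) / GIB} GiB")  # 3.25 GiB
```

The remaining 4.75GB of the 8GB installed simply goes unused under 32-bit XP.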

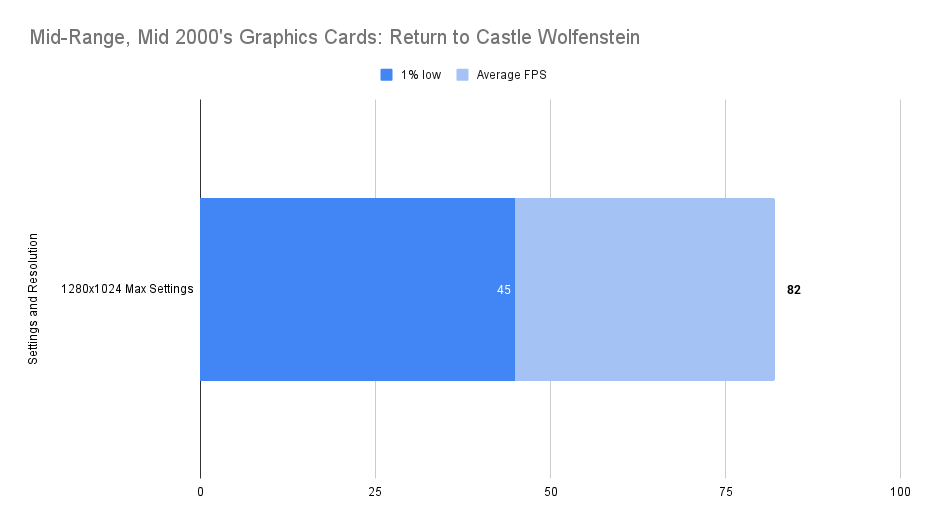

Return to Castle Wolfenstein (2001)

Game Overview:

Return to Castle Wolfenstein launched in November 2001, developed by Gray Matter Interactive and published by Activision.

Built on a heavily modified id Tech 3 engine, it’s a first-person shooter that blends WWII combat with occult horror, secret weapons programs, and Nazi super-soldier experiments.

The game features a linear campaign with stealth elements, fast-paced gunplay, and memorable enemy encounters.

The engine is OpenGL-only, with advanced lighting and skeletal animation for its character models.

The framerate is capped at 92 FPS.

Performance Notes:

It’s a nice, smooth experience playing this old title with everything cranked up to maximum.

I had expected the 1% low to sit close to the average framerate, but that wasn’t to be. The game is limited to 92 FPS, so the average doesn’t tell the full story, but it’s a good sign of compatibility at least.
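The gap between the average and the 1% low is easier to see with numbers. Average FPS is total frames divided by total time, while a 1% low only looks at the slowest frames. Capture tools define it differently (some average the slowest 1% of frames, others report the 99th-percentile frame time), and the tool behind these figures isn’t stated, so the sketch below uses the first definition as an assumption. The frame times are made up to mimic a capped game with a handful of hitches.

```python
def fps_summary(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average FPS, 1% low FPS) for a capture of per-frame render times.

    Average FPS is frames divided by total time, not the mean of per-frame FPS.
    The 1% low is the FPS over the slowest 1% of frames (at least one frame).
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    avg_fps = len(frame_times_ms) / (sum(frame_times_ms) / 1000)
    slowest = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest) // 100)        # size of the slowest-1% bucket
    low_1pct_fps = n / (sum(slowest[:n]) / 1000)
    return avg_fps, low_1pct_fps


capped = [1000 / 92] * 990   # hypothetical frames held at the 92 FPS cap
stutters = [25.0] * 10       # hypothetical 25 ms hitches
avg, low = fps_summary(capped + stutters)
print(f"average {avg:.1f} FPS, 1% low {low:.1f} FPS")  # average 90.8 FPS, 1% low 40.0 FPS
```

A run that sits at the cap almost all of the time still averages over 90 FPS, while ten 25 ms hitches out of a thousand frames drag the 1% low down to 40 FPS.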

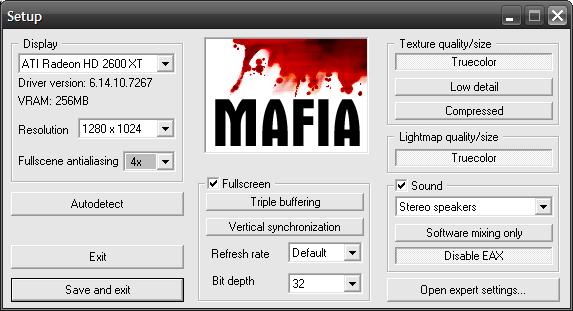

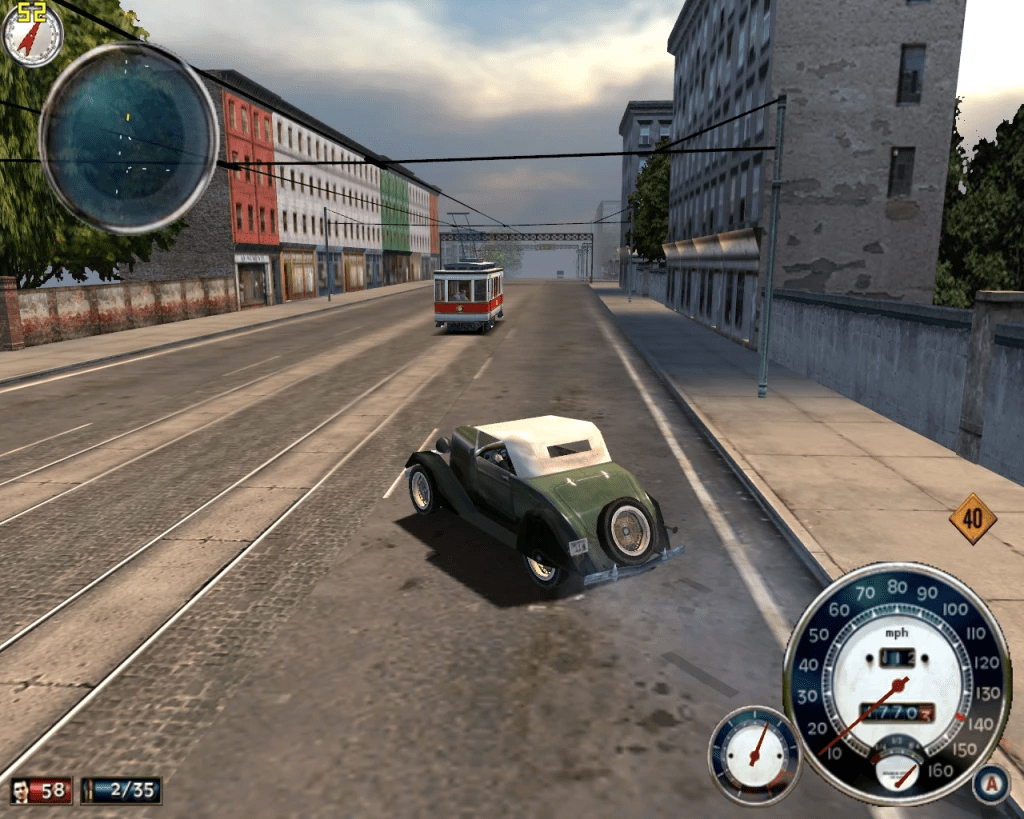

Mafia (2002)

Game Overview:

Mafia launched in August 2002, developed by Illusion Softworks and built on the LS3D engine. It’s a story-driven third-person shooter set in the 1930s, with a focus on cinematic presentation, period-authentic vehicles, and a surprisingly detailed open world for its time. The engine uses DirectX 8.0 and was known for its lighting effects and physics, though it’s a bit temperamental on modern systems.

It’s more of a compatibility test these days than a performance one. The game has a hard frame cap of 62 FPS built into the engine, which makes benchmarking a little odd. Still, it’s a good title to check how older GPUs handle legacy rendering paths and driver quirks.

Performance Notes:

The HD2600XT hits the 62 FPS average cap without issue.

1% low values are a bit lower than expected, especially considering the game’s modest requirements.

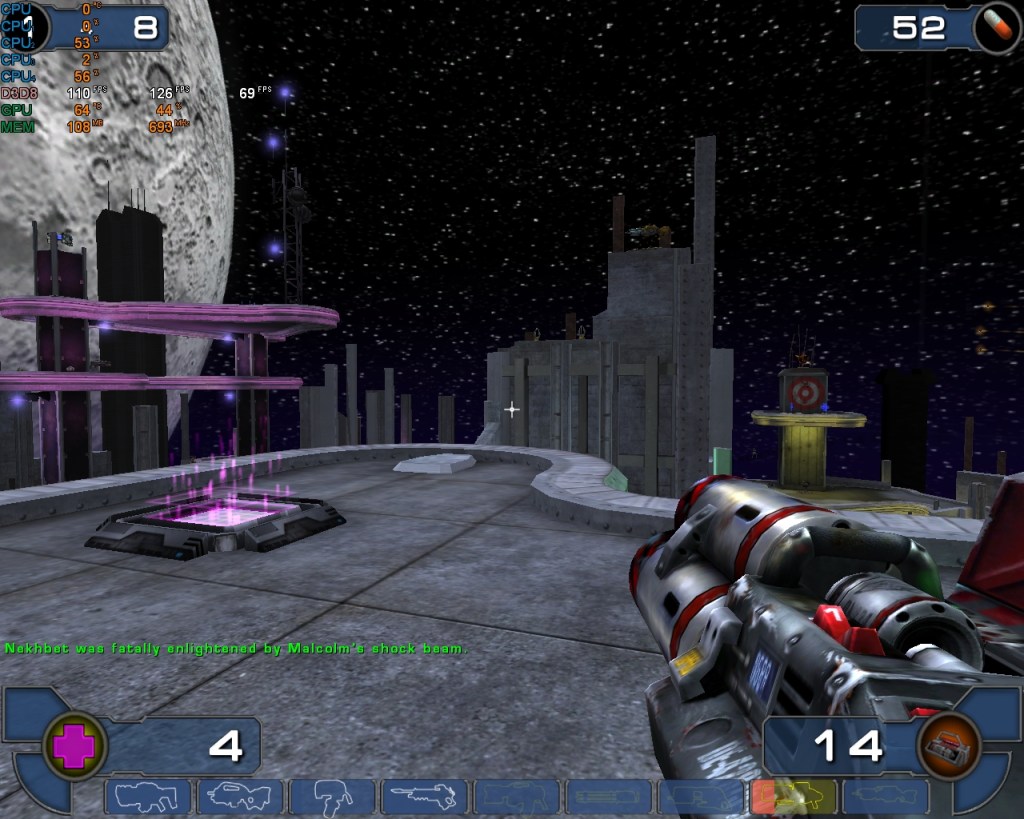

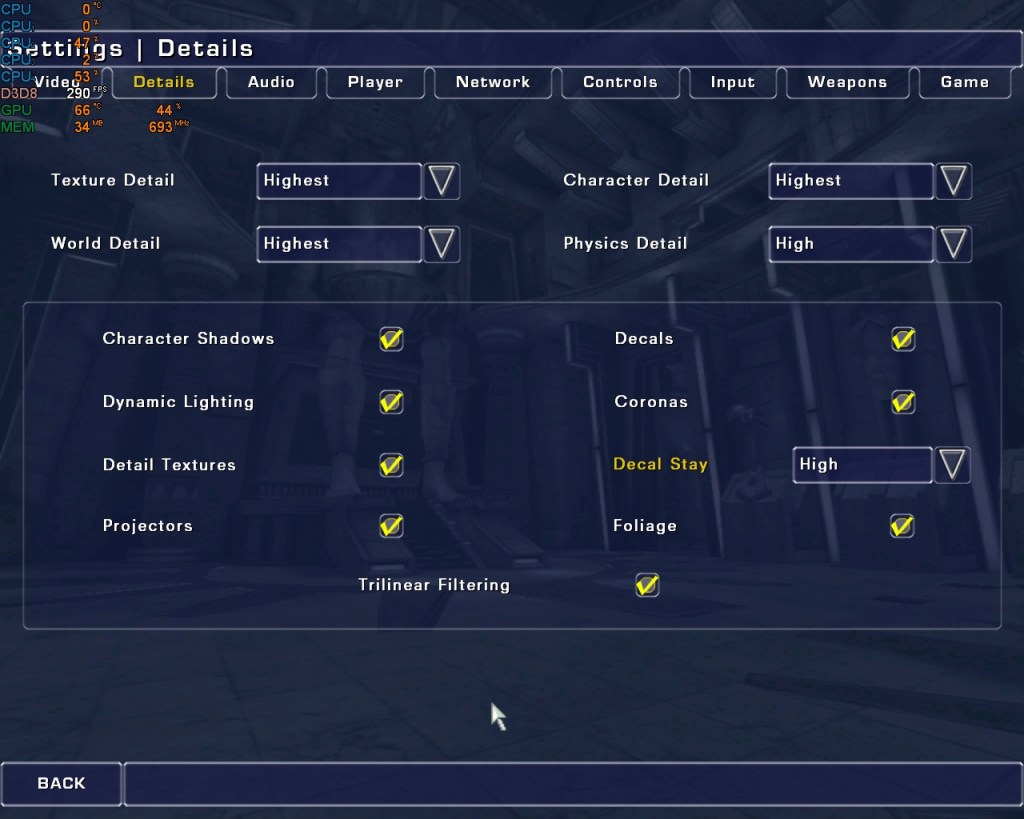

Unreal Tournament 2003 (2002)

Game Overview:

Released in October 2002, Unreal Tournament 2003 was built on an early version of Unreal Engine 2. It was a big leap forward from the original UT, with improved visuals, ragdoll physics, and faster-paced gameplay.

The engine used DirectX 8.1 and introduced support for pixel shaders, dynamic lighting, and high-res textures, all of which made it a solid test title for early 2000s hardware.

We played a lot of this and UT2004 at LAN parties back in the day.

Still a great game and well worth going back to, even if you’re mostly limited to bot matches these days. There’s even a single-player campaign of sorts, though it’s really just a ladder of bot battles.

The game holds up visually and mechanically, and it’s a good one to throw into the testing suite for older cards. The uncapped framerate is pretty useful for my purposes.

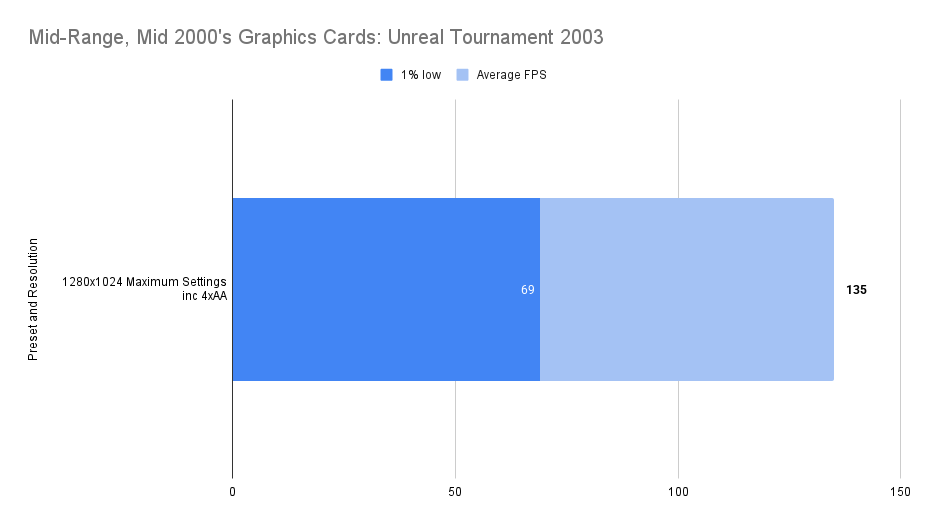

Performance Notes:

This mid-range card from 2007 has absolutely no problem running UT2003.

Average framerate lands comfortably in the triple figures, even with effects and detail settings pushed up.

Gameplay is smooth, responsive and visually sharp: no stutters, no complaints.

It’s not a stress test for the HD2600XT, but it’s a good compatibility check and a fun one to revisit.

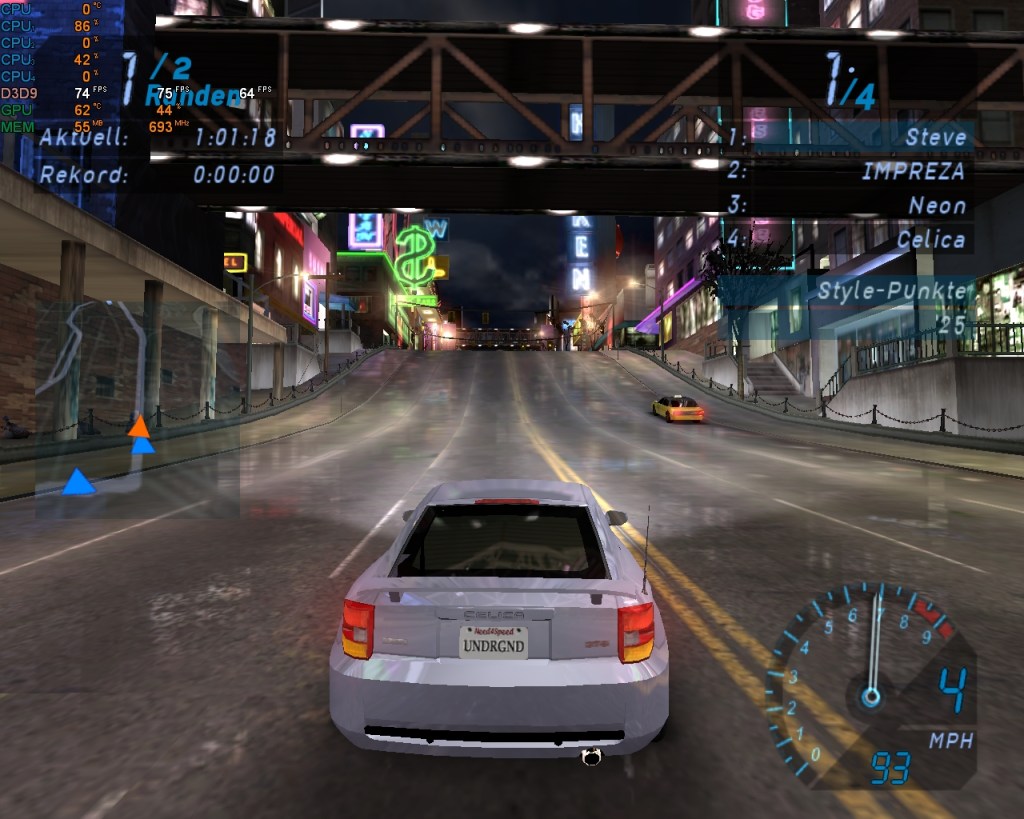

Need for Speed Underground (2003)

Game Overview:

Released in November 2003, Need for Speed: Underground marked a major shift for the series, diving headfirst into tuner culture and neon-lit street racing.

Built on the EAGL engine (version 1), it introduced full car customisation, drift events, and a career mode wrapped in early-2000s flair.

The game runs on DirectX 9 but carries over some quirks from earlier engine builds.

Performance Notes:

It’s a constant pain having the framerate capped in these earlier titles. It would be great to know how many frames these later cards could throw out, but the game engines won’t let them.

75 FPS feels silky smooth though, and the game is every bit as fun as I remember it being back in the day.

No 1% low problem on this one, but driving games seem to have it easier than other titles.

I’ve got the German version of the game, which is a fun thing to deal with sometimes.

Doom 3 (2004)

Game Overview:

Released in August 2004, Doom 3 was built on id Tech 4 and took the series in a darker, slower direction. It’s more horror than run-and-gun, with tight corridors, dynamic shadows, and a heavy focus on atmosphere. The engine introduced unified lighting and per-pixel effects, which made it a demanding title for its time, and still a good one to test mid-2000s hardware.

The game engine is limited to 60 FPS, but it includes an in-game benchmark without that limit, which can be used for testing.

Ultra settings are locked unless you’ve got 512 MB of VRAM, so that’s off the table here.

Performance Notes:

42 FPS average at 1280×1024 on High settings with 4×AA; very happy with that.

Smooth enough to play comfortably, and the lighting effects still look great.

Far Cry (2004)

Game Overview:

Far Cry launched in March 2004, developed by Crytek and built on the original CryEngine. It was a technical marvel at the time, with massive outdoor environments, dynamic lighting, and advanced AI. The game leaned heavily on pixel shaders and draw distance, making it a solid stress test for mid-2000s GPUs. It also laid the groundwork for what would later become the Crysis legacy.

I have results for this card alongside a few other mid-range options. The older articles need updating, but the numbers below still tell the story.

You’d want to play at 1024×768 or higher with these cards, and the Sapphire is the faster of the ATi offerings.

The 8600GT is the better option at this resolution, though the Nvidia card’s 1% low performance falls away significantly at Very High settings.

With AA enabled, the Nvidia card remains the best, and the Sapphire beats the Lenovo, though not significantly.

At 1280×1024 with Very High settings, the Sapphire 2600XT gives a good account of itself though is beaten handily by the Nvidia competition.

F.E.A.R. (2005)

Game Overview:

F.E.A.R. (First Encounter Assault Recon) launched on October 17, 2005 for Windows, developed by Monolith Productions. Built on the LithTech Jupiter EX engine, it was a technical showcase for dynamic lighting, volumetric effects, and intelligent enemy AI. The game blended tactical shooting with psychological horror, earning acclaim for its eerie atmosphere and cinematic combat.

Performance Notes:

The HD2600XT performs well without Anti-Aliasing enabled, maintaining playable framerates across most scenes.

Enabling Anti-Aliasing requires significantly lower settings to avoid performance drops.

Soft Shadows had minimal impact on average framerate but introduced inconsistencies: occasional dips and stutters during intense lighting transitions.

Conclusion:

For optimal balance, disabling AA and keeping Soft Shadows off or at low settings provided the smoothest experience. The HD2600XT handled F.E.A.R. admirably for its tier, especially given the game’s demanding lighting and particle effects.

Battlefield 2 (2005)

Game Overview:

Battlefield 2 launched on June 21, 2005, developed by DICE and published by EA. It was a major evolution for the franchise, introducing modern warfare, class-based combat, and large-scale multiplayer battles with up to 64 players. Built on the Refractor 2 engine, it featured dynamic lighting, physics-based ragdolls, and destructible environments that pushed mid-2000s hardware.

Performance Notes:

No single-player campaign, but I find bot matches via Instant Action a lot of fun. It was hugely popular in its day, especially for Commander Mode and vehicle combat, and it apparently had a thriving modding scene.

Even 20 years on, Battlefield 2 holds up as a satisfying bot-shooter. The HD2600XT handles it well, especially without AA.

Need for Speed: Carbon (2006)

Up until now I’ve been using Need for Speed: Most Wanted for benchmarking runs; it’s an iconic mid-2000s entry in the series, so it made sense.

I never did get on well with the game though; the bloom effects were just too much and it ran like crap on most cards.

For the mid-range I thought I’d switch things up to Need for Speed: Carbon. It was released a year later on the same game engine as NFS:MW, but it has better hardware compatibility and is far better optimised.

It’s also much easier to look at, maybe it’s because it reminds me of NFS:Underground, the one NFS game I played to death at the time.

I’ve given up on the set-race idea; instead I’ve been driving around town in open-world mode being chased by the police. Admittedly it’s a little harder to replicate identical runs, but I’ve been recording for longer to suck in a lot more data.

The HD2600XT performed adequately. Max settings felt fine even at 1280×1024, but it’s better to drop down a level to 1024×768.

Medium settings gave a huge average framerate and a good 1% low.

You can forget Anti-Aliasing on this card, though: performance plummeted into unplayable territory. The visuals are a little less jarring without it than in Most Wanted, maybe just because it’s all at night.

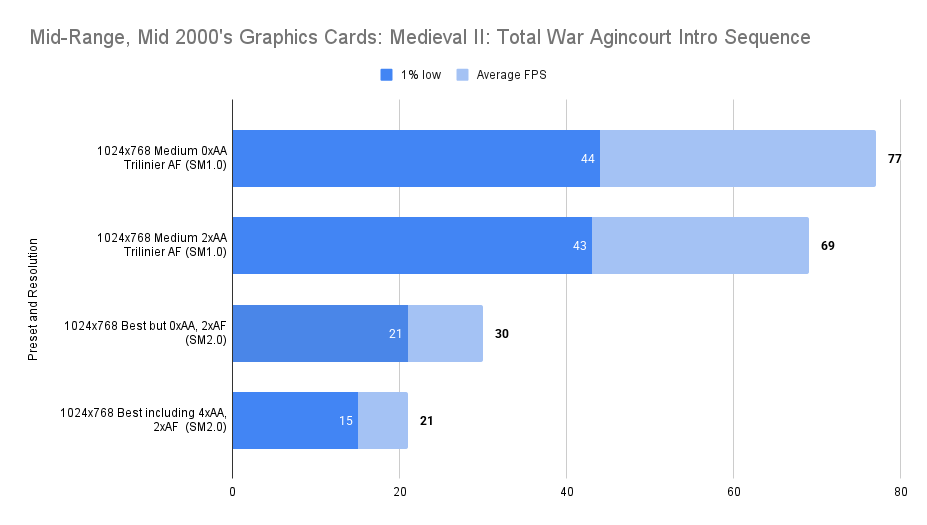

Medieval II: Total War (2006)

Game Overview:

Released on November 10, 2006, Medieval II: Total War was developed by Creative Assembly and published by Sega. It’s the fourth entry in the Total War series, built on the enhanced Total War engine with support for Shader Model 2.0 and 3.0. The game blends turn-based strategy with real-time battles, set during the High Middle Ages, and includes historical scenarios like Agincourt.

Performance Notes:

The Agincourt intro provides a fair and repeatable benchmark, rendering the same scene each time.

Medium settings with 2× Anti-Aliasing delivered solid performance, with smooth playback and consistent framerate. Attempting best settings with Shader Model 2.0 proved unstable or unplayable.

If any game is going to push the Phenom II, a CPU released more than two years after this game, it’s this one, which is something I do worry about.

Test Drive Unlimited (2006)

I always have fun playing this one. I certainly don’t miss the voice acting in the NFS series; those street racers need to take themselves a little less seriously.

It looks great as well. The HD2600XT does a pretty good job at Max settings, but again, Anti-Aliasing tanks the framerate, so it needs to stay off.

Not bad, then, at 1024×768 on Max settings. Shame about AA; cleaning up the jagged lines on the rear of the cars would really improve things.

Oblivion (2006)

Another game with test data from some of the competition.

Game Overview:

Oblivion launched on March 20, 2006, developed by Bethesda Game Studios. Built on the Gamebryo engine, it introduced a vast open world, dynamic weather, and real-time lighting. The game was a technical leap for RPGs, with detailed environments and extensive mod support that kept it alive well beyond its release window (it’s just had a re-release recently).

Known for its sprawling world, Oblivion remains a benchmark title for mid-2000s hardware. The game’s reliance on draw distance and lighting effects makes GPUs struggle.

Running at 800×600, the Sapphire HD2600XT is now firmly in last place. Not by a huge margin, but enough to notice. The 8600GT holds up better, especially when Anti-Aliasing is switched on, and AA really does make a difference in this game. The visuals sharpen up nicely, and the framerate stays respectable.

More strange results for the Sapphire card, which does not perform well here:

The Lenovo card gets the upper hand with its 512MB of VRAM.

Conclusion:

The HD2600s are unable to keep up with the 8600GT, but they still give playable performance.

Crysis (2007)

Game Overview:

Crysis launched in November 2007 and quickly became the go-to benchmark title for PC gamers. Built on CryEngine 2, it pushed hardware to the limit with massive draw distances, dynamic lighting, destructible environments, and full DirectX 10 support.

The game is just about playable on the HD2600XT and 8600GT under DirectX 9.

‘Very High’ settings exist, but they need DirectX 10, so they can’t be selected on XP. They’re tested separately later in the article.

At 800×600, the Sapphire HD2600XT pulls ahead. Not by a huge margin, but a few extra frames per second are always welcome in Crysis.

Same story at 1024×768. I expected the Lenovo card’s extra VRAM to help, but it didn’t. Sapphire beats both.

Anti-Aliasing performance was awful across the board. I didn’t retest; I couldn’t face it. Maybe the Lenovo card finally benefits from its extra VRAM here, but it’s still not playable.

Trying to hit enemies during a slideshow is a challenge I wouldn’t recommend.

1280×1024? Also unplayable. Sapphire edges up the average framerate by a whole 1%. Woop.

Verdict: Sapphire HD2600XT is the best of them but still a depressing experience.

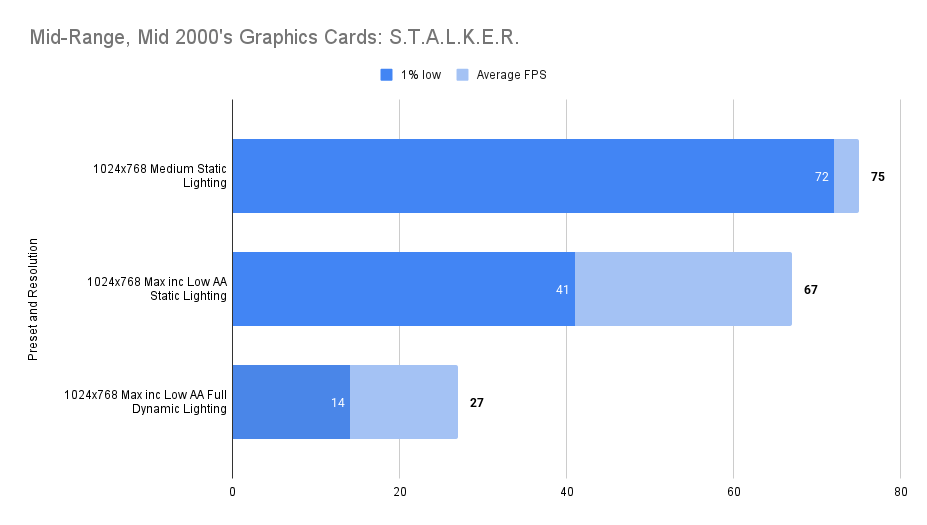

S.T.A.L.K.E.R. (2007)

Game Overview:

Released in March 2007, S.T.A.L.K.E.R.: Shadow of Chernobyl was developed by GSC Game World and runs on the X-Ray engine. It’s a gritty survival shooter set in the Chernobyl Exclusion Zone, blending open-world exploration with horror elements and tactical combat. The engine supports DirectX 8 and 9, with optional dynamic lighting and physics that can push older hardware to its limits.

Performance Notes:

Medium settings under DirectX 9 hit the frame cap easily and deliver smooth gameplay.

Vsync was disabled, but the engine still seems to cap the framerate at the monitor’s refresh rate.

Switching to Maximum settings nudges the AA slider up a notch. Still playable, though not as smooth.

Enabling Dynamic Lighting is where things fall apart: the framerate drops to around 27 FPS, and it’s noticeable.

The game remains playable at high settings if you avoid Dynamic Lighting, but it’s clear the HD2600XT is working hard to keep up.

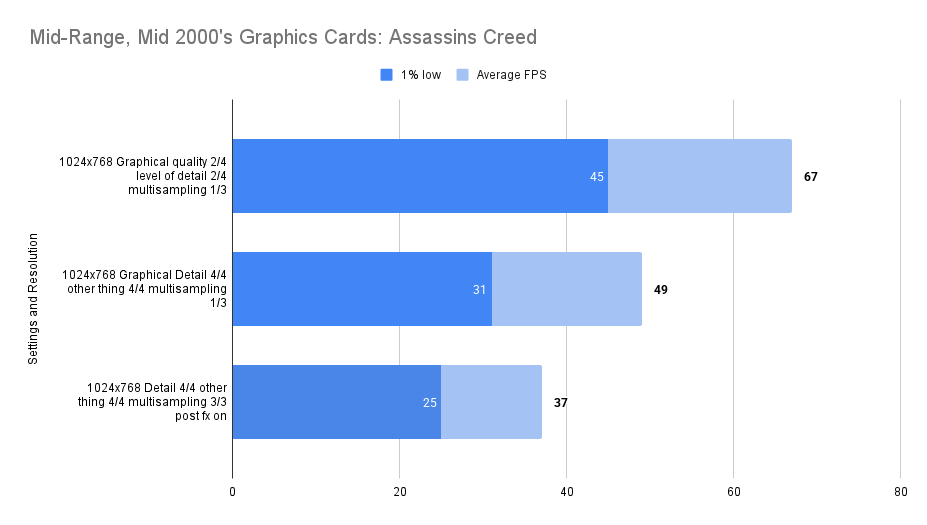

Assassin’s Creed (2007)

Game Overview:

Assassin’s Creed launched on consoles in November 2007 (the PC version followed in April 2008), developed by Ubisoft Montreal and built on the Anvil engine. It introduced open-world stealth gameplay, parkour movement, and historical settings wrapped in sci-fi framing. The first entry takes place during the Third Crusade, with cities like Damascus, Acre, and Jerusalem rendered in impressive detail for the time.

Performance Notes:

A tough game for most GPUs, but the HD2600XT does a respectable job keeping the frames flowing at medium settings.

There are no presets as such, just menu options you can tweak in-engine. No restart required, thankfully. I hate games that make you reboot just to change a texture slider.

49 FPS average at 1024×768 with detail on high feels good to play and is probably what I’d recommend.

Synthetic Benchmarks

The card was put through my usual suite of synthetic benchmarks (with 3DMark 2000 included just to check DX7 compatibility), with the following results:

3DMark 2000

3DMark 2001 SE

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
|---|---|---|---|
| Score | 35,362 | 29,282 | 30,145 |
| Fill Rate, Single Texturing (MTexels/s) | 2,445.8 | 2,271.4 | 2,264.9 |
| Fill Rate, Multi-Texturing (MTexels/s) | 8,120.6 | 5,325.1 | 6,115.3 |

The Sapphire card seemed to have the advantage on Multi-texturing fill rate.

3DMark 2003

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
|---|---|---|---|
| Score | 16848 | 13093 | 12896 |
| Fill Rate, Single Texturing (MTexels/s) | 2125.4 | 2026.3 | 1997.2 |
| Fill Rate, Multi-Texturing (MTexels/s) | 7752.2 | 5251.5 | 5999.8 |
| Pixel Shader 2.0 (FPS) | 220.1 | 123.0 | 112.1 |

Again, the Sapphire card has the advantage in multi-texturing fill rate.

3DMark 2006

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
|---|---|---|---|
| Score | 7261 | 5908 | 6072 |
| Shader Model 2.0 Score | 2733 | 1850 | 1831 |
| HDR/Shader Model 3.0 Score | 2685 | 2531 | 2635 |

Both HD2600XT cards are around the same in 3DMark 2006.

Unigine Sanctuary

| | Nvidia 8600GT | Lenovo HD2600XT | Sapphire HD2600XT |
|---|---|---|---|
| Score | 2194 | 1604 | 1615 |
| Average FPS | 51.7 | 37.8 | 38.1 |
| Min FPS | 34.5 | 27.9 | 29 |
| Max FPS | 66 | 49.6 | 51 |
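To put the “advantage” and “around the same” remarks into numbers, the gaps can be expressed as percentage differences. A small sketch using a selection of rows from the synthetic tables; `RESULTS` and `pct_diff` are names made up for this example.

```python
# Figures copied from the tables: (Nvidia 8600GT, Lenovo HD2600XT, Sapphire HD2600XT)
RESULTS = {
    "3DMark 2001 SE score": (35362, 29282, 30145),
    "3DMark 2001 SE multi-texturing fill rate": (8120.6, 5325.1, 6115.3),
    "3DMark 2003 score": (16848, 13093, 12896),
    "3DMark 2003 multi-texturing fill rate": (7752.2, 5251.5, 5999.8),
    "3DMark 2006 score": (7261, 5908, 6072),
    "Unigine Sanctuary average FPS": (51.7, 37.8, 38.1),
}


def pct_diff(a: float, b: float) -> float:
    """How far a is ahead of (+) or behind (-) b, as a percentage of b."""
    return (a / b - 1) * 100


for metric, (gf8600gt, lenovo, sapphire) in RESULTS.items():
    print(f"{metric}: Sapphire vs Lenovo {pct_diff(sapphire, lenovo):+.1f}%, "
          f"Sapphire vs 8600GT {pct_diff(sapphire, gf8600gt):+.1f}%")
```

On these figures the Sapphire leads the Lenovo by about 14 to 15% in multi-texturing fill rate, while the overall scores sit within 3% of each other, and both HD2600XT cards trail the 8600GT by roughly 15 to 26%.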

Temperature & Fan Speed

The Sapphire HD2600XT runs cool and quiet at stock settings: 44% fan speed at full load keeps it under 62°C.

DirectX 10 Testing

DirectX 9 dominated PC gaming for nearly a decade. Launched in 2002, it became the standard thanks to broad compatibility with Windows XP, which remained popular well into the 2010s.

In contrast, DirectX 10 debuted as a Vista-exclusive, an operating system many gamers avoided, making adoption slow.

Developers stuck with DX9 to maintain parity with consoles like the Xbox 360 and to avoid rebuilding engines from scratch, since DX10 introduced a new driver model and lacked backward compatibility.

Game engines such as Unreal Engine 3 and Source were deeply optimized for DX9, and rewriting them was costly.

Even as late as 2011, a large portion of gamers still used DX9 hardware, making it the safer choice.

We should still run a few tests to see how well these early unified-shader cards cope with what was new technology at the time.

The test system is dual-boot, so I installed Catalyst 13.9 on the Windows 7 drive and ran the following:

Call of Juarez (2006)

Call of Juarez launched in June 2006, developed by Techland and powered by the Chrome Engine 3. It’s a first-person shooter set in the American Wild West, blending gritty gunfights with biblical overtones and dual protagonists: Reverend Ray, a gun-slinging preacher, and Billy Candle, a fugitive accused of murder. The game alternates between stealth and action, with period-authentic weapons, horseback riding, and stylized sepia-toned visuals.

Built for DirectX 9.0c and optionally DX10, the Chrome Engine delivers dynamic lighting, HDR effects, and physics-driven interactions.

I only have the GOG version of the game which will only run in DX10 (the DX9 executable seems to be missing).

Using the inbuilt DirectX 10 benchmark, the following results were recorded:

Not great, but MSI Afterburner (which seems to give believable readings under Win7) confirms that the 256MB VRAM buffer is completely full, so perhaps more VRAM would unlock better performance.
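For a feel of what competes for that 256MB, the main render targets can be estimated from resolution and anti-aliasing level. This is a simplified sketch assuming 32-bit colour and 32-bit depth/stencil per sample, one resolve buffer when MSAA is on and a single front buffer; it ignores compression, padding, HDR formats and any extra buffers a game allocates, and textures usually take far more. The Call of Juarez benchmark resolution isn’t given, so 1280×1024, the resolution used elsewhere in these tests, is assumed. `render_target_mib` is a hypothetical helper.

```python
MIB = 1024 ** 2


def render_target_mib(width: int, height: int, msaa_samples: int,
                      color_bytes: int = 4, depth_bytes: int = 4) -> float:
    """Approximate memory for the main colour/depth buffers, in MiB."""
    pixels = width * height
    # Back buffer: colour plus depth/stencil stored for every sample.
    total = pixels * msaa_samples * (color_bytes + depth_bytes)
    if msaa_samples > 1:
        # Single-sample buffer the multisampled image is resolved into.
        total += pixels * color_bytes
    # Front buffer currently on screen.
    total += pixels * color_bytes
    return total / MIB


VRAM_MIB = 256  # this Sapphire card
for samples in (1, 2, 4):
    used = render_target_mib(1280, 1024, samples)
    print(f"{samples}x: {used:.1f} MiB ({used / VRAM_MIB:.0%} of {VRAM_MIB} MiB)")
```

Under these assumptions, 4× AA at 1280×1024 takes around 50 MiB before a single texture is loaded, against about 15 MiB without it, which is one reason a 256MB card runs out of room sooner with AA switched on.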

Crysis (64 Bit)

All you need to do to play DX10 Crysis is run it under Windows 7 (64-bit here) on a DX10 card, and the DX10-enabled version of the game starts.

Research suggests that everything needs to be switched to ‘Very High’ to get the full DX10 experience, though, which poses something of a problem, as the HD2600XT clearly doesn’t have the horsepower for that level of graphics.

Still, it was noticeably prettier to look at, so I dropped the resolution to 800×600 and recorded the results.

I’m keen to get the 512MB version of the card running: the VRAM buffer is saturated, so the game has to fall back on system RAM, which is definitely not helping performance.

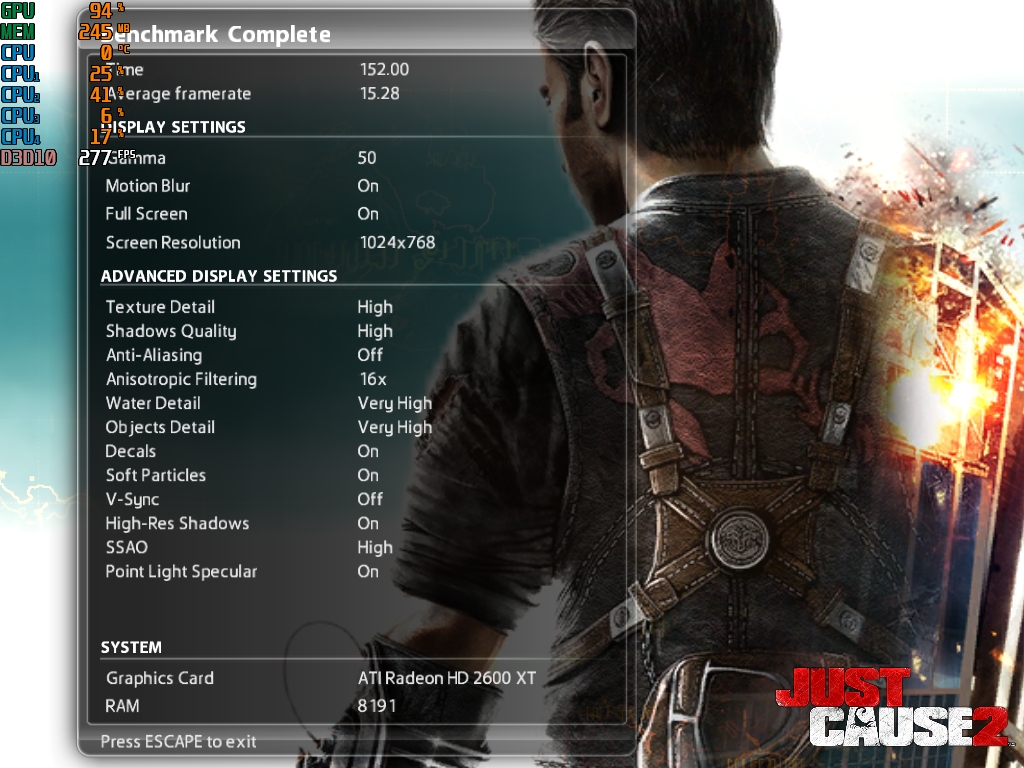

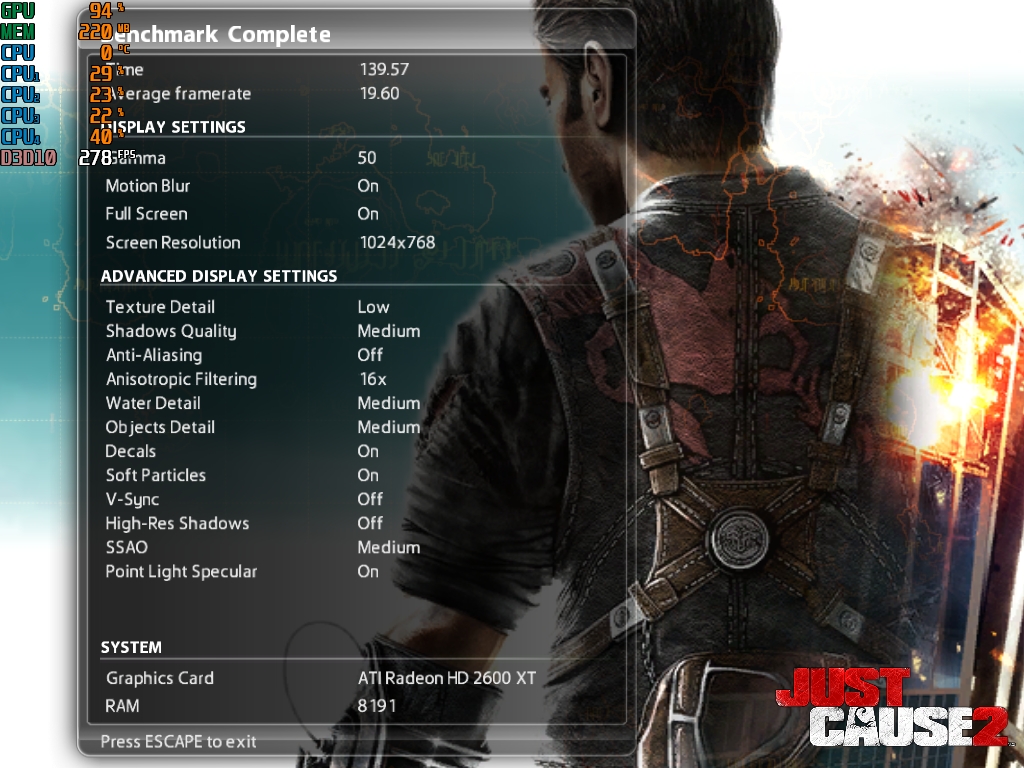

Just Cause 2 (2010)

Just Cause 2 launched in March 2010, developed by Avalanche Studios using the Avalanche Engine 2.0. Set in the tropical nation of Panau, it’s an open-world action game starring Rico Rodriguez, whose grappling hook and parachute enable chaotic stunts and explosive sabotage.

The previous games were DirectX 9 titles with some optional DirectX 10 features.

Just Cause 2, by contrast, was built for DirectX 10 and won’t run on XP at all. It features vast draw distances, dynamic weather, and physics-driven destruction, pushing GPU limits with cinematic flair and sandbox freedom.

I ran the Dark Tower benchmark, with the following results:

3DMark Vantage

The 3DMark version made for DX10 and Vista. I ran it on the Entry preset with the CPU tests disabled.

Summary and Conclusions

Well, that was the HD2600XT: quite an in-depth look at the card in the end, but a pleasure.

This article replaces a previous, much shorter version, in which I concluded that the GPU is a good choice for DirectX 9 games.

This summary was called into question by a Vogons member, which is fair enough.

So, is the HD2600XT a good card for DX9 games? Well, the evidence suggests it’s certainly not a bad one. It was mid-range at the time, and it plays mid-2000s games at a mid sort of level.

Its definite advantages are that it stays cool, doesn’t require external power, is quiet, and there’s a plentiful supply on eBay right now.

I haven’t tried to overclock the card, though with temperatures this low there’s clearly headroom. I’m not big on overclocking these relics; I like to keep a working collection of graphics cards.

The only thing still in question, then, is performance, relative both to the other early unified-shader cards and to the fixed-pipeline cards that came before.

The Nvidia GeForce 8600GT already has an article, which will get this same treatment. It’s clear from the limited testing so far that it’s a much more capable card than this HD2600XT, so it’s likely my recommendation of the two. I’m kind of fond of that card too, if I’m honest.

Though if performance is what’s driving the purchase, why look at either? The GTX 750 Ti seems to be a fan favourite: far newer, far better performance, low power and supported by XP. It does everything these earlier cards do, only better.

So then, should you buy an HD2600XT? Well, if you want one, then yes! If you don’t want one, though, my advice is to stay clear.

Glad I spent these last few weeks benchmarking, researching and writing to come to that useful conclusion for you all 😉

Ash

